| Issue | A&A Volume 686, June 2024 |
|---|---|
| Article Number | A130 |
| Number of page(s) | 8 |
| Section | Numerical methods and codes |
| DOI | https://doi.org/10.1051/0004-6361/202349024 |
| Published online | 05 June 2024 |

A generalizable method for estimating meteor shower false positives★

Institut Mécanique Céleste et de Calcul des Éphémérides, Observatoire de Paris, PSL, 75014 Paris, France

e-mail: patrick.shober@obspm.fr

Received: 19 December 2023

Accepted: 29 February 2024

Context. The determination of meteor shower or parent body associations is inherently a statistical problem. Traditional methods, primarily the similarity discriminants, have limitations, particularly in handling the increasing volume and complexity of meteoroid orbit data.

Aims. We introduce a new, more statistically robust and generalizable method for estimating false positive detections in meteor shower identification, leveraging kernel density estimation (KDE). The method is applied to fireball data from the European Fireball Network, a comprehensive photographic fireball observation network established in 1963 for the detailed monitoring and analysis of fireballs across central Europe.

Methods. Utilizing a dataset of 824 fireballs observed by the European Fireball Network, we applied a multivariate Gaussian kernel within KDE and Z-score data normalization. Our method analyzes the parameter space of meteoroid orbits and geocentric impact characteristics, focusing on four different similarity discriminants: DSH, D′, DH, and DN.

Results. The KDE methodology consistently converges toward a true established shower-associated fireball rate within the EFN dataset of 18–25% for all criteria. This indicates that the approach provides a more statistically robust estimate of the shower-associated component.

Conclusions. Our findings highlight the potential of KDE combined with appropriate data normalization in enhancing the accuracy and reliability of meteor shower analysis. This method addresses the existing challenges posed by traditional similarity discriminants and offers a versatile solution adaptable to varying datasets and parameters.

Key words: methods: data analysis / methods: statistical / comets: general / meteorites, meteors, meteoroids / minor planets, asteroids: general

The EFN fireball data used in this study is available in the publication by Borovička et al. (2022). The code developed for this study is available at https://zenodo.org/records/10406556.

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

The identification and analysis of meteor showers, distinct from the sporadic meteoroid background, is a pivotal aspect of meteor science. The International Astronomical Union (IAU) maintains a comprehensive list of these meteor showers and their associated parent bodies. This list is continuously updated with new entries derived from ongoing meteoroid orbit surveys, which emphasizes the dynamic nature of this field (Jenniskens et al. 2009, 2016; Kornoš et al. 2014; Rudawska et al. 2015). The process of distinguishing these meteor showers from the background noise hinges on the application of a D-criterion. Initially formulated by Southworth & Hawkins (1963), the DSH is calculated from two sets of orbital elements. This parameter, along with its derivatives, increases with decreasing similarity and is sometimes referred to as a dissimilarity parameter. Southworth & Hawkins (1963) designed their parameter for ease of computation and acknowledged the possibility of valid alternative formulations. One known flaw of this parameter is the physical unit inconsistency (Drummond 1980, 1981).

Variations such as the Drummond (1981) D-parameter have been developed. The orbital similarity parameter of Drummond (1981), similar to the version of Southworth & Hawkins (1963), balances the weighting of its four terms and uses angular distances rather than chords. A comparison by Jopek (1993) highlighted the overdependence of the Southworth & Hawkins (1963) parameter on perihelion distance and the Drummond (1981) parameter on eccentricity, leading to the proposal of a hybrid parameter, DH. These criteria all quantify the similarity between meteoroid orbits, with lower D-values indicating greater resemblance. Traditionally, a threshold value of DSH < 0.2 was employed, but lower values have since been used in recent studies (Jenniskens et al. 2009, 2016; Rudawska et al. 2015; Kornoš et al. 2014). In addition, Valsecchi et al. (1999) introduced a new distance function based on four geocentric quantities directly linked to observations. This methodology differs from the conventional orbital similarity criteria, for example the Southworth & Hawkins (1963) criterion, by focusing on variables that are near-invariant with respect to the principal secular perturbation affecting meteoroid orbits. The introduction of this new approach offers a more observationally direct and more accurate means for classifying meteoroid streams.

Despite its widespread use, the validity of the D-criterion as an absolute measure has been questioned, particularly its reliance on the sample size and the potential for misidentifying random associations as genuine meteor showers (Pauls & Gladman 2005; Koten et al. 2014; Egal et al. 2017; Vida et al. 2018). Given the rapid expansion of publicly available meteor orbit data, primarily from video meteor networks, there is a need for more robust methods to assess the statistical significance of the association between meteor showers and their proposed parent bodies. This need is further underscored by the increasing realization that meteoroid orbital elements are often more uncertain than previously thought (Egal et al. 2017; Vida et al. 2018). Notably, Moorhead (2016) and Sugar et al. (2017) have contributed significantly to the detection of meteor showers by employing the density-based spatial clustering algorithm (DBSCAN) for large datasets of meteor trajectories and orbits observed by the NASA All-Sky Fireball Network and the Southern Ontario Meteor Network. These studies underscore the necessity for more robust methods in assessing meteor shower associations, especially considering the uncertainties and varying characteristics of meteoroid orbits.

In response to these challenges, the study presented here introduces a new method for estimating D-criterion false positives, centered around kernel density estimation (KDE), a non-parametric way to estimate the probability density function (PDF) of a random variable. KDE has advantages over traditional histograms owing to its continuity and its flexibility in dealing with various data distributions. Our approach addresses the limitations inherent in traditional methods that rely heavily on the similarity discriminant, a parameter that has been historically used but often leads to ambiguous results. We employ a multivariate Gaussian kernel to model the distribution of sporadic meteoroid orbits. This kernel is defined by a covariance matrix that simplifies the complex relationships between orbital elements into a manageable form. We then generate synthetic samples from this KDE to estimate the sporadic background, subsequently enabling us to assess the likelihood of false positive detections in meteor shower identification. This methodology allows for a statistically sound examination of potential false associations between observations and meteor showers or parent bodies, aiming to address the shortcomings of previous methods and provide a more reliable and generalized framework for meteor shower analysis.

2 Method

The current state of meteor shower analysis, primarily governed by the similarity discriminant, presents several limitations. The discriminants, while useful, often lead to ambiguous results, especially when the underlying sample size is not adequately considered. This ambiguity is evident in the extensive list of potential parent bodies on the working list of meteor showers1, many of which may be spurious associations. Furthermore, the increasing volume of meteoroid orbit data, accompanied by significant uncertainties in their orbital elements, necessitates a more comprehensive method to distinguish between genuine meteor showers and random groupings within the sporadic background (Egal et al. 2017; Vida et al. 2017).

To address these challenges, our study employs KDE as a foundational tool for analyzing the orbital distributions of meteoroids. The KDE’s non-parametric nature allows for flexible and unbiased estimation of density functions, making it particularly suited for handling the diverse and often complex distributions encountered in meteor shower analysis (Seaman & Powell 1996). By synthesizing large samples from the KDE and comparing them against the observed meteoroid orbits, we can effectively gauge the probability of random coincidences, thereby refining our understanding of meteor shower associations.

Our methodology can be summarized as follows:

1. Data collection and preparation: gather a dataset of meteor observations, concentrating on the orbital and geocentric parameters necessary to calculate the DSH, D′, DH, and DN similarity discriminants. Apply Z-score normalization to the data in preparation for the KDE.

2. KDE: apply KDE to the normalized data to estimate the PDF of the sporadic meteor background. This involves selecting an appropriate kernel (e.g., Gaussian) and bandwidth, which determines the level of smoothing.

3. Calculation of dissimilarity criteria: compute the discriminant values (e.g., DSH, D′, DH, DN) for the dataset. These criteria assess the similarity between observed meteoroid orbits and known meteor showers.

4. False positive estimation: randomly draw Ndataset synthetic samples from the PDF of the sporadic population, as estimated by the KDE, and un-normalize the parameters so that the discriminant values can be calculated. Determine the rate of false positives by calculating the discriminant values for the synthetic sporadic samples and counting how many have low D-values by chance.

5. True positive identification: subtract the estimated false positives from the total number of associations found in the observed dataset to determine the true positive total, providing a clearer picture of genuine meteor shower associations.

2.1 Data

In this paper we use the dataset of 824 fireballs observed by the European Fireball Network (EFN) (Borovička et al. 2022). The EFN, established in 1963, represents a pioneering effort in the long-term monitoring of fireballs using a network of wide-angle and all-sky cameras. Initially set up in Czechoslovakia and Germany, it has expanded significantly in terms of geographic coverage and technological advancement. The network is primarily based in Central Europe, and has undergone several modernizations over the decades, including the transition from mirror to fish-eye cameras and, more recently, the adoption of digital autonomous observatories. These changes have significantly enhanced the network’s capabilities in detecting and analyzing fireballs. The network’s data have contributed to the recovery of several meteorites and have provided valuable insights into the physical and orbital properties of meteoroids.

2.2 Data processing

For this study, we employed rigorous data normalization and a KDE approach to estimate the sporadic background of meteor observations, regardless of the variables of interest. Before a KDE can be used for this purpose, the data need to be normalized in order to avoid over- or under-smoothing. Normalization ensures that all features in a dataset contribute equally to the analysis; without it, features with larger scales can disproportionately influence the KDE, leading to biased results. For example, when fitting a KDE to orbital data, the angular elements range over either 0–180° or 0–360°, whereas the eccentricity only varies between 0.0 and 1.0. Without some normalization the semimajor axis and eccentricity features in the dataset would likely be over-smoothed.

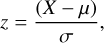

In this study, we follow a standard practice of normalization by using a Z-score normalization (Glantz et al. 2001). Z-score normalization, also known as standard score normalization, is a statistical method used to standardize the features of a dataset. It is defined by the formula

$$Z = \frac{X - \mu}{\sigma},$$

where X is the original data value, μ is the mean of the data, and σ is the standard deviation. In this process, each feature value is transformed by subtracting the mean of the feature and then dividing by its standard deviation. This normalization process facilitates the conversion of each feature to a scale with a mean of 0 and a standard deviation of 1, thereby rectifying the issue of disparate scales that could lead to over- or under-smoothing in the KDE process. Such standardization is indispensable in our analysis, given the diverse range and nature of the orbital and geocentric parameters under consideration. This transformation is applied individually to each variable and does not alter the relative positioning of individual data points within each feature. Importantly, because Z-score normalization adjusts each feature individually by its own mean and standard deviation, it does not inherently disrupt the correlation structure between features. Furthermore, the reversible nature of Z-score normalization permits the re-scaling of the KDE output to the original data scale, enabling the interpretation and application of the results within the authentic context of the observed meteoroid orbits. Note, however, that while Z-score normalization harmonizes the scale across parameters, it does not alter the underlying distributional characteristics of the data, such as skewness or kurtosis. This initial phase of preprocessing the data was implemented using the StandardScaler method from the scikit-learn library (Pedregosa et al. 2011).
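The normalization step can be illustrated with a minimal sketch using scikit-learn's StandardScaler. The two toy features below (an angle in degrees and an eccentricity-like value) are hypothetical stand-ins, not the EFN data; the sketch only shows that the transform is reversible and leaves the correlation between features unchanged.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Hypothetical toy features with very different scales (not the EFN data).
angle = rng.uniform(0.0, 360.0, size=500)
ecc = 0.3 + 0.001 * angle + rng.normal(0.0, 0.05, size=500)
X = np.column_stack([angle, ecc])

scaler = StandardScaler()
Z = scaler.fit_transform(X)           # (X - mean) / std, column by column
X_back = scaler.inverse_transform(Z)  # undo the scaling

# Correlation between the two features before and after normalization.
corr_before = np.corrcoef(X, rowvar=False)[0, 1]
corr_after = np.corrcoef(Z, rowvar=False)[0, 1]
```

After the transform, each column of `Z` has zero mean and unit standard deviation, so a single bandwidth acts comparably on both features.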

Within this study, we use a normalization process to allow the use of a single bandwidth parameter to generate uniform smoothing across dimensions. However, the methodology proposed by Vida et al. (2017), which introduces the use of a diagonal bandwidth matrix (Eq. (12) in Vida et al. 2017), represents an alternative that merits consideration. This approach allows for the individual adjustment of bandwidths for each parameter, tailored to their specific distributional properties, thereby offering a more manual alternative to the method implemented here. Thus, if certain features need to be smoothed more than others, this approach should be utilized instead. However, for demonstrating how smoothing generally affects the shower false positive estimate, our methodology, which allows for the use of one bandwidth value, is ideal.

2.3 KDE

Post-normalization, we implemented the KDE using the KernelDensity class from scikit-learn. A KDE is a non-parametric method used to estimate the PDF of a random variable based on a data sample. It works by placing a kernel, typically a Gaussian function, on each data point and then summing these kernels to create a smooth estimate of the underlying PDF. This is particularly beneficial for elucidating the underlying structure of the data when the form of the distribution is unknown, and for accommodating the multi-modality often present in sparse data (Silverman 2018). Additionally, KDE is known for its flexibility in accurately estimating densities of various shapes, provided that the level of smoothing is appropriately selected (Seaman & Powell 1996).

Within this study, we utilize a Gaussian kernel in order to provide enough smoothing to estimate the sporadic meteor component from the dataset. However, before fitting the KDE to the data, all established showers were identified and removed in order to ensure that the false positive rate was not overestimated. A KDE with a sufficiently large bandwidth could be used to effectively smooth away all of the shower-related features; however, if the shower component is significant this reduces the accuracy of the sporadic distribution. If the shower component is in fact dominating the dataset under question, as seen in many datasets (Table 1; Jopek & Froeschlé 1997), this method over-estimates the false positive rate. In this study we use a dataset of 824 EFN fireballs, of which up to 45% were estimated to be shower-associated (Borovička et al. 2022). However, Borovička et al. (2022) stated that the shower membership listed is obvious for well-defined major showers, but many of the minor showers might not even be real. We chose to remove the established major shower components, removing all fireballs with a DN < 0.1 with an established shower2. If the shower-component of a dataset is very large, the well-established showers need to be removed; however, this must be done carefully as an over-removal and under-smoothing of the KDE will conversely result in an under-estimate of the meteor shower false positive rate.

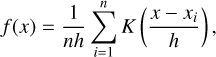

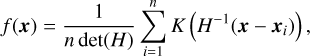

The KDE for a univariate dataset is defined as

$$\hat{f}(x) = \frac{1}{nh} \sum_{i=1}^{n} K\left(\frac{x - x_i}{h}\right),$$

where $\hat{f}(x)$ is the estimated density at point x; n is the number of data points; $x_i$ are the data points; h is the bandwidth, a smoothing parameter; and K is the kernel, a non-negative function that integrates to one and has mean zero. The choice of the kernel function K and the bandwidth h are crucial.

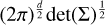

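For multivariate data, the KDE becomes

$$\hat{f}(\mathbf{x}) = \frac{1}{n} \sum_{i=1}^{n} \frac{1}{\det(\mathbf{H})} K\left(\mathbf{H}^{-1}(\mathbf{x} - \mathbf{x}_i)\right),$$

where $\mathbf{x}$ and $\mathbf{x}_i$ are now vectors; $\mathbf{H}$ is the bandwidth matrix, generalizing the smoothing parameter to multiple dimensions; and $\det(\mathbf{H})$ is the determinant of $\mathbf{H}$, normalizing the kernel.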

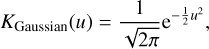

The Gaussian kernel KGaussian used in this study is defined as

$$K_{\rm Gaussian}(u) = \frac{1}{\sqrt{2\pi}}\, e^{-u^2/2},$$

where K(u) is the Gaussian kernel; u is the standardized variable, calculated as $u = (x - x_i)/h$, where x is the evaluation point, $x_i$ is a data point, and h is the bandwidth; e is the base of the natural logarithm. The kernel integrates to 1 over its domain, conforming to the properties of a PDF.

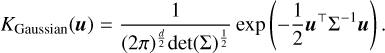

In the multivariate case, the kernel is instead

$$K_{\rm Gaussian}(\mathbf{u}) = \frac{1}{\sqrt{(2\pi)^d \det(\boldsymbol{\Sigma})}} \exp\left(-\frac{1}{2}\mathbf{u}^{\top} \boldsymbol{\Sigma}^{-1} \mathbf{u}\right).$$

Here K(u) represents the multivariate Gaussian kernel; u is the standardized variable vector, calculated as $\mathbf{H}^{-1}(\mathbf{x} - \mathbf{x}_i)$, where x is the evaluation point vector, $\mathbf{x}_i$ is a data point vector, and H is the bandwidth matrix; d is the number of dimensions; Σ is the covariance matrix, often related to the bandwidth matrix H; det(Σ) is the determinant of the covariance matrix; the prefactor $1/\sqrt{(2\pi)^d \det(\boldsymbol{\Sigma})}$ normalizes the kernel to ensure it integrates to 1; exp is the exponential function; $\mathbf{u}^{\top}$ is the transpose of u; and $\boldsymbol{\Sigma}^{-1}$ is the inverse of Σ. The equations for the KDE and corresponding Gaussian kernel were taken from Hastie et al. (2009), where a more detailed description can be found.
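As a cross-check of the definitions above, the following minimal sketch evaluates a multivariate Gaussian KDE by hand and compares it with scikit-learn's KernelDensity. It assumes the simplest case of an isotropic bandwidth matrix H = hI and an identity covariance matrix; the function name `gaussian_kde_density` and the random test data are illustrative only.

```python
import numpy as np
from sklearn.neighbors import KernelDensity


def gaussian_kde_density(points, data, h):
    """Evaluate a Gaussian KDE with H = h * I and identity covariance."""
    n, d = data.shape
    # u[p, i, :] = H^-1 (x_p - x_i), the standardized offset vectors
    u = (points[:, None, :] - data[None, :, :]) / h
    kernel = np.exp(-0.5 * np.sum(u**2, axis=2)) / np.sqrt((2.0 * np.pi) ** d)
    # det(H) = h^d for an isotropic bandwidth matrix
    return kernel.sum(axis=1) / (n * h**d)


rng = np.random.default_rng(1)
data = rng.normal(size=(200, 3))    # stand-in for normalized features
points = rng.normal(size=(5, 3))    # evaluation points
h = 0.25

manual = gaussian_kde_density(points, data, h)

kde = KernelDensity(kernel="gaussian", bandwidth=h).fit(data)
from_sklearn = np.exp(kde.score_samples(points))  # score_samples returns log-density
```

The two estimates should agree to numerical precision, which is a quick way to confirm that a single scalar bandwidth in KernelDensity corresponds to the H = hI case.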

Despite its advantages, there are considerations to be made when using KDE. For instance, the level of smoothing, determined by the bandwidth parameter, must be carefully chosen to avoid under-fitting or over-fitting the data. Here we considered multiple bandwidths to demonstrate how this parameter affects the estimate of the sporadic meteor population and subsequently the meteor shower false positive rate for each similarity discriminant.

The utilization of KDE on cyclic or periodic data also warrants careful consideration. Cyclic parameters, such as the angular elements in meteoroid orbital data, are continuous across their boundaries (e.g., 360° wraps back to 0°), a property that conventional KDE approaches defined on a linear domain may not adequately accommodate. Treating the boundary as a discontinuity can lead to misleading density estimates, particularly near the edges of the cyclic range. The process of Z-score normalization, while facilitating the standardization of data scales, does not inherently resolve the cyclic nature of these parameters. Consequently, the application of KDE to unmodified cyclic data, even post-normalization, necessitates a methodological adjustment to ensure that the periodic continuity is preserved and accurately represented in the density estimation.

To address this challenge, specific strategies may be employed, such as adapting KDE with cyclic kernels or transforming cyclic data into a format that inherently respects its periodic boundaries. These adaptations are essential for capturing the true density landscape of cyclic parameters, and ensure that the estimations reflect the natural continuity and cyclic behavior inherent to such data. This consideration underscores the importance of selecting appropriate KDE configurations and transformations that align with the data’s characteristics, thereby enhancing the accuracy and relevance of the density estimates derived from our analysis. The nuanced handling of cyclic data within KDE highlights the broader theme of methodological adaptability, emphasizing the need for tailored approaches that are sensitive to the unique properties of the dataset under investigation.
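One possible adaptation, shown here purely as an illustration, is a wrapped Gaussian kernel in which each kernel is summed over its periodic images. The text does not state which strategy was adopted, so the function names, the toy angles clustered around 0°/360°, and the bandwidth below are all assumptions.

```python
import numpy as np


def linear_kde(theta, data, h):
    """Ordinary Gaussian KDE on the real line (no wrap-around)."""
    u = (theta[:, None] - data[None, :]) / h
    return np.exp(-0.5 * u**2).sum(axis=1) / (data.size * h * np.sqrt(2.0 * np.pi))


def wrapped_kde(theta, data, h, period=360.0, n_wraps=3):
    """Gaussian KDE summed over periodic images of each kernel."""
    density = np.zeros(theta.shape, dtype=float)
    for k in range(-n_wraps, n_wraps + 1):
        density += linear_kde(theta + k * period, data, h)
    return density


rng = np.random.default_rng(7)
# Hypothetical angles (degrees) clustered around the 0/360 boundary.
angles = rng.normal(0.0, 15.0, size=300) % 360.0
h = 10.0

edge = np.array([0.0, 360.0])
lin_edge = linear_kde(edge, angles, h)
wrap_edge = wrapped_kde(edge, angles, h)

# Probability mass inside [0, 360) for each estimator (Riemann sum).
grid = np.arange(0.0, 360.0, 0.1)
linear_mass = linear_kde(grid, angles, h).sum() * 0.1
wrapped_mass = wrapped_kde(grid, angles, h).sum() * 0.1
```

In this sketch the wrapped estimate takes the same value at 0° and 360° and keeps all of its probability mass inside the cyclic range, whereas the linear estimate leaks mass past the boundaries.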

2.4 Dissimilarity criteria

A similarity discriminant is a statistical measure used in astronomy to evaluate the similarity between the orbital elements of meteoroids, asteroids, or comets. It has been refined into several versions, each with unique characteristics and calculations. We detail four prominent versions of the D-value: DSH (Southworth & Hawkins 1963), D′ (Drummond 1981), DH (Jopek 1993), and DN (Valsecchi et al. 1999).

2.4.1 Southworth-Hawkins discriminant

The study by Southworth & Hawkins (1963) was the first to introduce a method for identifying meteoroid streams. Their approach was to calculate an orbital discriminant (DSH) based on the calculated pre-impact orbital elements of the meteors detected by Baker Super-Schmidt meteor cameras.

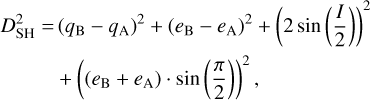

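The DSH orbital similarity discriminant is defined as

$$D_{\rm SH}^2 = (e_2 - e_1)^2 + (q_2 - q_1)^2 + \left(2\sin\frac{I_{21}}{2}\right)^2 + \left(\frac{e_1 + e_2}{2}\right)^2 \left(2\sin\frac{\pi_{21}}{2}\right)^2,$$

where

$$\left(2\sin\frac{I_{21}}{2}\right)^2 = \left(2\sin\frac{i_2 - i_1}{2}\right)^2 + \sin i_1 \sin i_2 \left(2\sin\frac{\Omega_2 - \Omega_1}{2}\right)^2,$$

$$\pi_{21} = \omega_2 - \omega_1 + 2\arcsin\left(\cos\frac{i_2 + i_1}{2}\, \sin\frac{\Omega_2 - \Omega_1}{2}\, \sec\frac{I_{21}}{2}\right).$$

Here e is the eccentricity, q is the perihelion distance, i is the inclination, Ω is the longitude of the ascending node, and ω is the argument of perihelion of each orbit; the sign of the arcsin term is reversed when |Ω2 − Ω1| > 180°.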

2.4.2 Drummond discriminant

The Drummond discriminant, proposed by Drummond (1981), is also an orbital discriminant that can be used to differentiate between small bodies and meteors based on their orbital elements.

It is expressed as

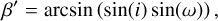

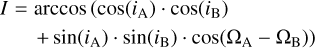

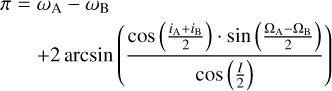

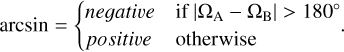

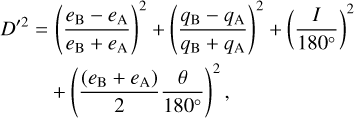

where

with

This criterion focuses more on the differences in eccentricity e and perihelion distance q between two orbits, compared to Southworth & Hawkins (1963).
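A minimal sketch of the Drummond discriminant is given below, assuming the commonly quoted form of D′ in which the two angular terms are divided by 180° (π in radians). The function names and the tuple layout `(q, e, i, node, peri)` are illustrative choices, with all angles in radians.

```python
import numpy as np


def ecliptic_perihelion(i, node, peri):
    """Ecliptic longitude and latitude of the perihelion point (radians)."""
    # arctan2 selects the quadrant, which is equivalent to adding 180 deg
    # to arctan(cos(i) * tan(peri)) when cos(peri) < 0.
    lon = node + np.arctan2(np.cos(i) * np.sin(peri), np.cos(peri))
    lat = np.arcsin(np.sin(i) * np.sin(peri))
    return lon, lat


def d_drummond(orbit1, orbit2):
    """Drummond D' for two orbits given as (q, e, i, node, peri)."""
    q1, e1, i1, node1, peri1 = orbit1
    q2, e2, i2, node2, peri2 = orbit2

    # Angle between the two orbital planes.
    cos_I = np.cos(i1) * np.cos(i2) + np.sin(i1) * np.sin(i2) * np.cos(node2 - node1)
    I21 = np.arccos(np.clip(cos_I, -1.0, 1.0))

    # Angle between the two perihelion directions.
    lon1, lat1 = ecliptic_perihelion(i1, node1, peri1)
    lon2, lat2 = ecliptic_perihelion(i2, node2, peri2)
    cos_theta = (np.sin(lat1) * np.sin(lat2)
                 + np.cos(lat1) * np.cos(lat2) * np.cos(lon2 - lon1))
    theta21 = np.arccos(np.clip(cos_theta, -1.0, 1.0))

    d_sq = (((e2 - e1) / (e2 + e1)) ** 2
            + ((q2 - q1) / (q2 + q1)) ** 2
            + (I21 / np.pi) ** 2
            + ((e1 + e2) / 2.0) ** 2 * (theta21 / np.pi) ** 2)
    return float(np.sqrt(d_sq))


orbit_a = (0.9, 0.4, np.radians(10.0), np.radians(30.0), np.radians(100.0))
orbit_b = (0.9, 0.6, np.radians(10.0), np.radians(30.0), np.radians(100.0))
d_ab = d_drummond(orbit_a, orbit_b)  # only the eccentricity differs
```

Because every term is normalized (by e₁ + e₂, q₁ + q₂, or 180°), each contributes at most order unity, which is why D′ thresholds tend to be numerically smaller than those used for DSH.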

2.4.3 Jopek discriminant

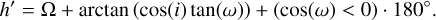

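The discriminant introduced by Jopek (1993) is a hybrid variant that combines elements of Southworth & Hawkins (1963) and Drummond (1981). It can be written as

$$D_{\rm H}^2 = (e_2 - e_1)^2 + \left(\frac{q_2 - q_1}{q_2 + q_1}\right)^2 + \left(2\sin\frac{I_{21}}{2}\right)^2 + \left(\frac{e_1 + e_2}{2}\right)^2 \left(2\sin\frac{\pi_{21}}{2}\right)^2,$$

where I21 and π21 are the same angular quantities used in the Southworth-Hawkins discriminant.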
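Since DSH and DH share their angular terms and differ only in how the perihelion distance enters, both can be sketched with one helper. This is an illustrative implementation of the commonly quoted forms, not the code released with the paper; orbits are hypothetical tuples `(q, e, i, node, peri)` with angles in radians, and the edge case I21 = 180° is not handled.

```python
import numpy as np


def _angular_terms(i1, node1, peri1, i2, node2, peri2):
    """Return (2 sin(I21/2))^2 and (2 sin(pi21/2))^2 for two orbits."""
    # Wrapping the node difference into (-pi, pi] plays the role of the usual
    # sign reversal of the arcsin term when |node2 - node1| > 180 deg.
    dnode = np.arctan2(np.sin(node2 - node1), np.cos(node2 - node1))
    chord_I_sq = ((2.0 * np.sin((i2 - i1) / 2.0)) ** 2
                  + np.sin(i1) * np.sin(i2) * (2.0 * np.sin(dnode / 2.0)) ** 2)
    half_I = np.arcsin(np.clip(np.sqrt(chord_I_sq) / 2.0, 0.0, 1.0))
    arg = np.cos((i1 + i2) / 2.0) * np.sin(dnode / 2.0) / np.cos(half_I)
    pi21 = peri2 - peri1 + 2.0 * np.arcsin(np.clip(arg, -1.0, 1.0))
    return chord_I_sq, (2.0 * np.sin(pi21 / 2.0)) ** 2


def d_southworth_hawkins(orbit1, orbit2):
    """D_SH: absolute differences in e and q plus the angular chord terms."""
    q1, e1, i1, node1, peri1 = orbit1
    q2, e2, i2, node2, peri2 = orbit2
    chord_I_sq, chord_pi_sq = _angular_terms(i1, node1, peri1, i2, node2, peri2)
    d_sq = ((e2 - e1) ** 2 + (q2 - q1) ** 2 + chord_I_sq
            + ((e1 + e2) / 2.0) ** 2 * chord_pi_sq)
    return float(np.sqrt(d_sq))


def d_jopek(orbit1, orbit2):
    """D_H: as D_SH, but with the q difference normalized by q1 + q2."""
    q1, e1, i1, node1, peri1 = orbit1
    q2, e2, i2, node2, peri2 = orbit2
    chord_I_sq, chord_pi_sq = _angular_terms(i1, node1, peri1, i2, node2, peri2)
    d_sq = ((e2 - e1) ** 2 + ((q2 - q1) / (q2 + q1)) ** 2 + chord_I_sq
            + ((e1 + e2) / 2.0) ** 2 * chord_pi_sq)
    return float(np.sqrt(d_sq))


base = (0.9, 0.4, np.radians(10.0), np.radians(30.0), np.radians(100.0))
wider_q = (1.0, 0.4, np.radians(10.0), np.radians(30.0), np.radians(100.0))
dsh_q = d_southworth_hawkins(base, wider_q)  # only q differs
dh_q = d_jopek(base, wider_q)
```

For the pair above, only the perihelion distance differs, so DSH reduces to |q₂ − q₁| while DH reduces to |q₂ − q₁|/(q₂ + q₁); this makes the reduced dependence of DH on perihelion distance explicit.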

2.4.4 Valsecchi discriminant

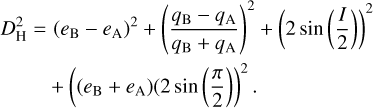

The Valsecchi discriminant, DN, developed by Valsecchi et al. (1999), takes a completely different approach: it uses four geocentric quantities directly linked to meteor observations. This diverges from the traditional discriminant values based on the osculating orbital elements at impact. The proposed approach defines the distance function in a space with dimensions equal to the number of independently measured physical quantities.

The new variables introduced are the modulus of the unperturbed geocentric velocity, U; two angles, θ and ϕ, defining the direction of U based on Öpik’s theory, and used to define the direction opposite to that from which the meteoroid is observed, considering Earth’s gravity effect; and the solar longitude of the meteoroid (λ) hitting the Earth. Valsecchi et al. (1999) recommended using cos θ instead of θ, as it is directly proportional to 1/a (the orbital energy of the meteoroid), making it suitable for the new distance function.

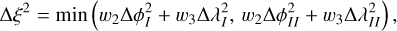

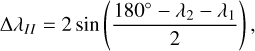

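The similarity criterion, DN, is defined as

$$D_N^2 = (U_2 - U_1)^2 + w_1 (\cos\theta_2 - \cos\theta_1)^2 + \Delta\xi^2,$$

where

$$\Delta\xi^2 = \min\left(w_2 \Delta\phi_{\rm I}^2 + w_3 \Delta\lambda_{\rm I}^2,\; w_2 \Delta\phi_{\rm II}^2 + w_3 \Delta\lambda_{\rm II}^2\right),$$

$$\Delta\phi_{\rm I} = 2\sin\frac{\phi_2 - \phi_1}{2}, \qquad \Delta\phi_{\rm II} = 2\sin\frac{180^\circ + \phi_2 - \phi_1}{2},$$

$$\Delta\lambda_{\rm I} = 2\sin\frac{\lambda_2 - \lambda_1}{2}, \qquad \Delta\lambda_{\rm II} = 2\sin\frac{180^\circ + \lambda_2 - \lambda_1}{2},$$

and w1, w2, and w3 are suitably defined weighting factors (all are set to 1.0 here). We note that ∆ξ is small if ϕ1 − ϕ2 and λ1 − λ2 are either both small or both close to 180°.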
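The geocentric criterion can be sketched in the same way. The implementation below assumes the commonly quoted form of DN with all weights defaulting to 1.0, as in the text; the function name and the tuple layout `(U, theta, phi, lam)` are illustrative, with angles in radians.

```python
import numpy as np


def d_valsecchi(m1, m2, w1=1.0, w2=1.0, w3=1.0):
    """D_N for two meteors given as (U, theta, phi, lam), angles in radians."""
    U1, th1, ph1, lam1 = m1
    U2, th2, ph2, lam2 = m2

    # Branch I: phi and lambda differences both small.
    dphi_a = 2.0 * np.sin((ph2 - ph1) / 2.0)
    dlam_a = 2.0 * np.sin((lam2 - lam1) / 2.0)
    # Branch II: phi and lambda differences both close to 180 deg.
    dphi_b = 2.0 * np.sin((np.pi + ph2 - ph1) / 2.0)
    dlam_b = 2.0 * np.sin((np.pi + lam2 - lam1) / 2.0)

    dxi_sq = min(w2 * dphi_a**2 + w3 * dlam_a**2,
                 w2 * dphi_b**2 + w3 * dlam_b**2)
    d_sq = (U2 - U1) ** 2 + w1 * (np.cos(th2) - np.cos(th1)) ** 2 + dxi_sq
    return float(np.sqrt(d_sq))


meteor_a = (1.2, np.radians(60.0), np.radians(40.0), np.radians(140.0))
# Same U and theta, with phi and lambda both shifted by 180 deg.
meteor_b = (1.2, np.radians(60.0), np.radians(220.0), np.radians(320.0))
d_ab = d_valsecchi(meteor_a, meteor_b)
```

The second meteor illustrates the remark about ∆ξ: shifting both ϕ and λ by 180° leaves the discriminant at essentially zero.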

2.5 Estimating the number of false positives

To estimate the number of false positives within a given dataset for a given similarity discriminant, we start by using the KDE to estimate a PDF of the sporadic population. From this sporadic PDF, samples are randomly drawn in batches of Ndataset, where Ndataset is the total number of datapoints in the dataset (i.e., the total number of fireballs in the EFN dataset).

We employ scikit-learn’s KernelDensity class to perform random sampling from a fitted Gaussian KDE (Pedregosa et al. 2011). The sampling algorithm initiates by randomly selecting base points from the dataset used in the KDE fitting, ensuring an equitable chance of selection across data points, unless sample weights are provided, in which case selection probabilities are adjusted accordingly. Upon selecting base points, the algorithm introduces Gaussian noise to each, effectively drawing a random sample from a Gaussian distribution with a mean equal to the base point’s coordinates and a standard deviation determined by the KDE’s bandwidth parameter. Mathematically, for a base point denoted $x_i$, a sampled point $x'_i$ is produced according to $x'_i = x_i + \mathcal{N}(0, h^2)$, where $\mathcal{N}(0, h^2)$ signifies a Gaussian noise component with mean 0 and variance $h^2$, and h represents the bandwidth. This procedure, repeated for each base point, generates samples that reflect the density estimated by the KDE, with the bandwidth parameter serving as a critical smoothing factor, influencing the dispersion of generated samples around the base points and thereby controlling the smoothness of the estimated distribution. Ultimately, this method yields a set of points distributed according to the original dataset’s estimated PDF, as modeled by the Gaussian KDE, facilitating the exploration of continuous data distributions and leveraging the Gaussian kernel’s inherent properties for applications such as Monte Carlo simulations and synthetic data creation. More information regarding the scikit-learn’s KernelDensity class is available on GitHub3.
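The sampling rule described above can be written out by hand and compared with `KernelDensity.sample`. The sketch below uses random two-dimensional stand-in data and an unweighted KDE; the variable names are illustrative.

```python
import numpy as np
from sklearn.neighbors import KernelDensity

rng = np.random.default_rng(42)
data = rng.normal(size=(300, 2))  # stand-in for normalized sporadic data
h = 0.25
n_draw = 5000

# Manual version: pick base points uniformly, then add N(0, h^2) noise.
base_idx = rng.integers(0, data.shape[0], size=n_draw)
manual_samples = data[base_idx] + rng.normal(0.0, h, size=(n_draw, data.shape[1]))

# scikit-learn version of the same procedure.
kde = KernelDensity(kernel="gaussian", bandwidth=h).fit(data)
sklearn_samples = kde.sample(n_samples=n_draw, random_state=0)

# Per-feature variance expected for samples from the KDE:
# data variance plus the kernel variance h^2.
expected_var = data.var(axis=0) + h**2
```

Both sets of samples should have per-feature variances close to `expected_var`, which shows directly how a larger bandwidth inflates the spread of the synthetic sporadic population.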

This process is done 100 times to get good statistics on the likelihood of a chance association for a dataset of that size, as it has been shown previously that the number of false positives changes with sample size (Southworth & Hawkins 1963). It is also important to note that this false positive estimate is a maximum value, as it considers a completely sporadic dataset of the same size. For each random sample of size Ndataset drawn from the sporadic meteor PDF, the similarity discriminant is calculated. In this study we calculate the DSH, D′, DH, and DN values for every random draw and every meteor shower in the IAU Meteor Data Center’s Established Shower list4. Assuming that the estimated PDF accurately reflects the distribution of sporadic sources within the dataset, we interpret the count of samples whose similarity discriminant values fall below a specified threshold (referred to as the D-criterion) as our estimate of false positives. This means that, within the context of our analysis, any sample from the sporadic source distribution that yields a similarity discriminant value lower than this threshold is considered a false positive. This threshold-based approach allows us to quantify the likelihood of mistakenly identifying sporadic sources as members of a significant pattern or group when in reality they are not, based on the statistical properties of the dataset under investigation. Additionally, by taking a Monte Carlo approach, uncertainty can be placed on the false positive estimate.
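The Monte Carlo procedure can be sketched end to end as follows. To keep the example short, a Euclidean distance in a two-parameter space stands in for the real discriminants, and the sporadic sample and "shower" reference points are invented; `estimate_false_positives`, `toy_discriminant`, and all numerical values are therefore hypothetical.

```python
import numpy as np
from sklearn.neighbors import KernelDensity
from sklearn.preprocessing import StandardScaler


def toy_discriminant(a, b):
    """Hypothetical stand-in for a D-value: Euclidean distance."""
    return np.sqrt(np.sum((a - b) ** 2, axis=-1))


def estimate_false_positives(sporadic, showers, discriminant, d_limit,
                             n_total, bandwidth=0.25, n_trials=100, seed=0):
    """Mean and scatter of chance matches in fully sporadic synthetic datasets."""
    scaler = StandardScaler().fit(sporadic)
    kde = KernelDensity(kernel="gaussian", bandwidth=bandwidth)
    kde.fit(scaler.transform(sporadic))

    counts = np.empty(n_trials, dtype=int)
    for t in range(n_trials):
        # Draw one synthetic dataset and map it back to physical units.
        z = kde.sample(n_samples=n_total, random_state=seed + t)
        synthetic = scaler.inverse_transform(z)
        # D-value of every synthetic meteor against every shower.
        d = discriminant(synthetic[:, None, :], showers[None, :, :])
        counts[t] = np.count_nonzero(d.min(axis=1) < d_limit)
    return counts.mean(), counts.std(ddof=1)


rng = np.random.default_rng(5)
sporadic = rng.normal([1.0, 0.5], [0.3, 0.2], size=(600, 2))
showers = np.array([[1.0, 0.5], [2.5, 0.9]])

fp_mean, fp_std = estimate_false_positives(
    sporadic, showers, toy_discriminant, d_limit=0.1, n_total=824)
```

The standard deviation over the 100 trials provides the uncertainty on the false positive estimate mentioned above; swapping `toy_discriminant` for a real D-function and `showers` for a reference shower list would follow the same pattern.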

3 Results

Applying a KDE to fireball network observations is a promising way to estimate the meteor shower false positives and, by extension, the true positives. Here we applied the KDE to the observations to estimate the sporadic distribution for the variables involved in the D discriminant calculations for DSH, D′, DH, and DN. The parameters change between these D-values, but the KDE false positive method seems to provide a generalizable way of estimating the shower component despite this change.

3.1 KDE smoothing

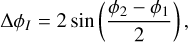

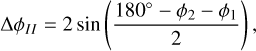

Due to our Z-score normalization of the data, we can use a single bandwidth value to apply the same level of smoothing to parameters whose ranges and magnitudes vary significantly. As seen in Figs. 1 and 2, we applied multiple KDEs with increasing bandwidth values to the observed parameters that are used to calculate the discriminants. Figure 1 compares the PDFs of the orbital parameters used to calculate DSH, D′, and DH to the observed distributions, whereas Fig. 2 does the same for the geocentric parameters used to calculate DN. We applied a KDE with four different bandwidth values [0.1, 0.25, 0.5, 1.0] in order to demonstrate the effect on the false positive estimate. As the bandwidth values increase toward 1.0, the features of the original measured distribution become less pronounced.

Although we tested bandwidths up to 1.0, this value is too large and starts to remove some of the likely sporadic features in the dataset as well. Using cross-validation and a grid search to ascertain the optimal bandwidth for a KDE with a Gaussian kernel gives a reasonable value of 0.22 for the EFN dataset. Specifically, we used five-fold cross-validation, in which the data are partitioned into five subsets, or folds. In each iteration, one fold is designated as the test set and the remaining four are combined to form the training set. The KDE is fit to the training set to estimate the density function, and the test set is then used to evaluate the effectiveness of this density estimation. The process is iterated five times so that each fold serves once as the test set, which keeps the evaluation of the bandwidth comprehensive and unbiased. The grid search iterates over a pre-defined range of bandwidths, in this instance from 0.1 to 1.0, segmented into 30 intervals. The performance of the KDE model is evaluated for each bandwidth value, and the optimal bandwidth is defined as the one that strikes a balance between over-smoothing, which obscures pertinent details of the data distribution, and under-smoothing, which introduces excessive noise.

This method of determining the optimal bandwidth can work if the shower components resemble noise in the data. However, if the showers make up a much larger component, the major established showers will need to be removed during a preprocessing step to adequately estimate the underlying sporadic distribution.
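A bandwidth search of this kind can be sketched with scikit-learn's GridSearchCV, which scores each candidate by the held-out log-likelihood returned by `KernelDensity.score`. The toy data below are invented, and the grid is assumed to be 30 evenly spaced values between 0.1 and 1.0, which is one reading of the description above.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KernelDensity
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(3)
# Hypothetical two-feature dataset, normalized before the search.
raw = np.column_stack([rng.normal(50.0, 20.0, size=400),
                       rng.beta(2.0, 5.0, size=400)])
Z = StandardScaler().fit_transform(raw)

# Assumed grid: 30 evenly spaced bandwidths from 0.1 to 1.0.
bandwidths = np.linspace(0.1, 1.0, 30)

search = GridSearchCV(
    KernelDensity(kernel="gaussian"),
    {"bandwidth": bandwidths},
    cv=5,  # five-fold cross-validation
)
search.fit(Z)
best_h = search.best_params_["bandwidth"]
```

The selected value depends on the dataset, so the 0.22 quoted for the EFN data should not be expected from this toy example.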

Fig. 1 Histogram of observed distribution of EFN orbital parameters used to calculate DSH, D′, and DH with curves denoting the PDF estimated by a KDE with various bandwidth values.

Fig. 2 Histograms of observed distribution of EFN geocentric parameters used to calculate DN with curves denoting the PDF estimated by a KDE with various bandwidth values.

3.2 False positive estimate

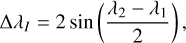

The false positive percentage obtained for each KDE bandwidth is shown in Fig. 3. The limiting value needed to significantly decrease the false positive rate varies widely between similarity discriminants, as their ranges of possible values are not identical. For example, DN achieves a false positive rate of less than 5% with a D-criterion value of ~0.15 or less, whereas the D′ discriminant achieves a similar level of false positives only when the limit is less than 0.05 (i.e., when using D′, the limit must be set much lower). The DH discriminant attains a false positive rate of <5% around a limit of 0.1, and the classical DSH requires a limit of roughly 0.07 (depending on the bandwidth of the KDE used). However, these limits and false positive rates will vary depending on the fireball or meteor dataset being examined.
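One way to turn a KDE into a false positive rate for a given limit is a Monte Carlo comparison between the observed data and synthetic draws from the estimated PDF. The sketch below is an illustration under stated assumptions, not the paper's implementation: `min_dissimilarity` is a placeholder Euclidean distance standing in for a real discriminant such as DSH or DN, the data are synthetic and assumed already Z-scored, and the rate is defined as the expected number of KDE-drawn matches divided by the number of observed matches.

```python
import numpy as np

def sample_gaussian_kde(samples, bandwidth, n_draws, rng):
    """Draw from a Gaussian KDE: pick a data point, add N(0, h^2) noise."""
    idx = rng.integers(0, len(samples), size=n_draws)
    noise = rng.normal(0.0, bandwidth, size=(n_draws, samples.shape[1]))
    return samples[idx] + noise

def min_dissimilarity(points, showers):
    """Placeholder D-value: smallest Euclidean distance to any shower."""
    diff = points[:, None, :] - showers[None, :, :]
    return np.sqrt((diff**2).sum(axis=2)).min(axis=1)

def false_positive_rate(observed_d, synthetic_d, limit):
    """Expected KDE-drawn matches below `limit` per observed match."""
    n_observed = np.count_nonzero(observed_d < limit)
    if n_observed == 0:
        return float("nan")
    # Scale the synthetic match fraction to the observed sample size.
    expected_false = np.mean(synthetic_d < limit) * len(observed_d)
    return min(1.0, expected_false / n_observed)

rng = np.random.default_rng(42)
showers = np.array([[1.5, 1.5, 1.5]])            # hypothetical shower
background = rng.standard_normal((700, 3))       # hypothetical sporadics
stream = showers[0] + rng.normal(0.0, 0.05, size=(120, 3))
observed = np.vstack([background, stream])

synthetic = sample_gaussian_kde(observed, bandwidth=0.25, n_draws=20000, rng=rng)
rate = false_positive_rate(
    min_dissimilarity(observed, showers),
    min_dissimilarity(synthetic, showers),
    limit=0.1,
)
```

In this toy setup the kernel smears the tight stream over a wider region, so few synthetic draws land within the limit and the rate stays low. Raising the limit or the bandwidth changes that balance, which is the dependence that Fig. 3 explores for the real discriminants.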

If we calculate all the D-values for the EFN dataset and subtract the number of false positives estimated by the KDE analysis, we obtain minimal estimates for the number of “true shower associations” (Fig. 4), that is, the number of fireballs that meet the D-criterion and are not spurious associations. The y-axis in this plot thus represents the estimate of true shower matches according to our KDE-produced sporadic meteor PDF. Interestingly, despite the different false positive levels of the similarity discriminants, all four D-values converge toward ~150–200 shower-associated fireballs as the limit decreases. This gives us confidence that the values we estimate are generalizable to any scalar similarity discriminant method. It also indicates that the number of fireballs in the EFN dataset associated with established showers is somewhere around 150–200. Considering the size of the 2017–2018 EFN dataset (824 fireballs), this translates to approximately 18–25% of the dataset. This does not account for minor showers on the large working list of the IAU MDC; many of these showers may turn out not to be real, and thus we did not consider them.
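The arithmetic behind these numbers is simple enough to write out. In the sketch below, the 824-fireball total and the 150–200 range come from the text, while the 250 matches and the 8% false positive fraction in the first call are hypothetical values chosen only to show the subtraction.

```python
def true_association_estimate(n_below_limit, false_positive_fraction):
    """Matches below the D limit minus the estimated spurious matches."""
    n_false = false_positive_fraction * n_below_limit
    return n_below_limit - n_false

def shower_fraction(n_true, n_total):
    """Share of the dataset attributed to established showers."""
    return n_true / n_total

# Hypothetical example: 250 matches with an 8% false positive fraction.
example = true_association_estimate(250, 0.08)

# Range quoted in the text for the 824-fireball EFN dataset.
n_total = 824
low = shower_fraction(150, n_total)
high = shower_fraction(200, n_total)
print(f"{100 * low:.1f}% to {100 * high:.1f}%")  # prints "18.2% to 24.3%"
```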

Moorhead (2016) and Sugar et al. (2017) used DBSCAN to cluster data explicitly into distinct meteor shower groups, whereas the KDE in this work was employed to estimate the underlying PDF, from which the sporadic background and the likelihood of false positives in meteor shower identification can be inferred. DBSCAN provides a categorical interpretation (cluster vs. outlier), whereas a KDE offers a probabilistic view of the data’s distribution. A KDE may provide a more nuanced understanding of the data distribution, especially where the boundaries between clusters are not clear-cut, but it requires careful selection of the bandwidth parameter. DBSCAN, on the other hand, identifies dense regions more directly, but is sensitive to its core parameters. In short, DBSCAN assigns individual data points to groups, while a KDE characterizes the overall distribution of the data. The two methods have distinct advantages and can be complementary, depending on the specific objectives of the analysis.

Finally, it is important to note that this shower estimate is statistical in nature. A low D-value is important, as demonstrated by the decreasing false positive level as the limit decreases in Fig. 3. However, any meteor that meets the limiting requirement, regardless of how low its D-value is, could still be a spurious association. For example, we estimate that between 6 and 11% of the fireballs with DN < 0.2 are false positives, but the false positives do not necessarily have the largest values in the subset. This is important to consider, especially when applying these discriminants to individual fireballs and drawing broad conclusions based on similarities with showers or small bodies.

Fig. 3 Percentage of false positives found for DSH, D′, DH, and DN for various bandwidth values and D-value limits.

Fig. 4 Estimate of the number of true shower associations for the EFN dataset, i.e., the number of fireballs that have D-values below the nominal limit for the observed dataset minus the estimated number of false positives for the given limit.

4 Conclusions

KDE, supplemented by rigorous data normalization techniques, provides a robust and flexible framework for estimating the sporadic background of meteor observations when the sporadic background is more significant than the meteor shower component. This approach allows for a more accurate assessment of meteor shower false positives, addressing the limitations inherent in traditional methods that rely heavily on the D-criterion. Our findings demonstrate that the optimal bandwidth, determined via cross-validation and grid search methods, plays a crucial role in achieving a balance between over- and under-smoothing of the data and obtaining an accurate false positive estimate.

Furthermore, our research underscores the importance of considering the statistical properties of meteoroid data, particularly the implications of sample size and observational uncertainties. By adopting a more nuanced and statistically sound methodology, we can enhance our understanding of meteor shower associations and their parent bodies. The generalizability of our approach, combined with its adaptability to different datasets and parameter sets, can be used to create better statistics of near-Earth meteoroid populations.

Acknowledgements

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement no. 945298 ParisRegionFP.

References

- Borovička, J., Spurný, P., Shrbený, L., et al. 2022, A&A, 667, A157

- Drummond, J. 1980, in Southwest Regional Conference for Astronomy and Astrophysics, 5, 83

- Drummond, J. D. 1981, Icarus, 45, 545

- Egal, A., Gural, P., Vaubaillon, J., Colas, F., & Thuillot, W. 2017, Icarus, 294, 43

- Glantz, S. A., Slinker, B. K., & Neilands, T. B. 2001, Primer of Applied Regression & Analysis of Variance (New York: McGraw-Hill, Inc.)

- Hastie, T., Tibshirani, R., & Friedman, J. H. 2009, The Elements of Statistical Learning: Data Mining, Inference, and Prediction (Springer)

- Jenniskens, P., Jopek, T. J., Rendtel, J., et al. 2009, WGN, J. Int. Meteor Organ., 37, 19

- Jenniskens, P., Nénon, Q., Albers, J., et al. 2016, Icarus, 266, 331

- Jopek, T. J. 1993, Icarus, 106, 603

- Jopek, T., & Froeschlé, C. 1997, A&A, 320, 631

- Kornoš, L., Matlovic, P., Rudawska, R., et al. 2014, The Meteoroids 2013, Proc. Astron. Conf., eds. T. J. Jopek, F. J. M. Rietmeijer, J. Watanabe, & I. P. Williams (A.M. University Press), 225

- Koten, P., Vaubaillon, J., Capek, D., et al. 2014, Icarus, 239, 244

- Moorhead, A. V. 2016, MNRAS, 455, 4329

- Pauls, A., & Gladman, B. 2005, Meteor. Planet. Sci., 40, 1241

- Pedregosa, F., Varoquaux, G., Gramfort, A., et al. 2011, J. Mach. Learn. Res., 12, 2825

- Rudawska, R., Matlovic, P., Tóth, J., & Kornoš, L. 2015, Planet. Space Sci., 118, 38

- Seaman, D. E., & Powell, R. A. 1996, Ecology, 77, 2075

- Silverman, B. W. 2018, Density Estimation for Statistics and Data Analysis (Routledge)

- Southworth, R., & Hawkins, G. 1963, Smithsonian Contrib. Astrophys., 7, 261

- Sugar, G., Moorhead, A., Brown, P., & Cooke, W. 2017, Meteor. Planet. Sci., 52, 1048

- Valsecchi, G., Jopek, T., & Froeschlé, C. 1999, MNRAS, 304, 743

- Vida, D., Brown, P. G., & Campbell-Brown, M. 2017, Icarus, 296, 197

- Vida, D., Brown, P. G., & Campbell-Brown, M. 2018, MNRAS, 479, 4307

