| Issue |

A&A

Volume 559, November 2013

|

|

|---|---|---|

| Article Number | A73 | |

| Number of page(s) | 11 | |

| Section | Astronomical instrumentation | |

| DOI | https://doi.org/10.1051/0004-6361/201321079 | |

| Published online | 18 November 2013 | |

Optimal arrays for compressed sensing in snapshot-mode radio interferometry

Stanford University,

450 Serra Mall,

Stanford,

CA

94305,

USA

e-mail:

clarafj@stanford.edu

Received:

11

January

2013

Accepted:

19

June

2013

Context. Radio interferometry has always faced the problem of incomplete sampling of the Fourier plane. A possible remedy can be found in the promising new theory of compressed sensing (CS), which allows for the accurate recovery of sparse signals from sub-Nyquist sampling given certain measurement conditions.

Aims. We provide an introductory assessment of optimal arrays for CS in snapshot-mode radio interferometry, using orthogonal matching pursuit (OMP), a widely used CS recovery algorithm similar in some respects to CLEAN. We focus on comparing centrally condensed (specifically, Gaussian) arrays to uniform arrays, and randomized arrays to deterministic arrays such as the VLA.

Methods. The theory of CS is grounded in a) sparse representation of signals and b) measurement matrices of low coherence. We calculate the mutual coherence of measurement matrices as a theoretical indicator of arrays’ suitability for OMP, based on the recovery error bounds in Donoho et al. (2006, IEEE Trans. Inform. Theory, 52, 1289). OMP reconstructions of both point and extended objects are also run from simulated incomplete data. Optimal arrays are considered for objects represented in 1) the natural pixel basis and 2) the block discrete cosine transform (BDCT).

Results. We find that reconstructions of the pixel representation perform best with the uniform random array, while reconstructions of the BDCT representation perform best with normal random arrays. Slight randomization to the VLA also improves it dramatically for CS recovery with the pixel basis.

Conclusions. In the pixel basis, array design for CS reflects known principles of array design for small numbers of antennas, namely of randomness and uniform distribution. Differing results with the BDCT, however, emphasize the need to study how sparsifying bases affect array design before CS can be optimized for radio interferometry.

Key words: instrumentation: interferometers / methods: numerical / techniques: image processing / radio continuum: general

© ESO, 2013

1. Introduction

Interferometry is the definitive imaging tool of radio astronomy, probing high-resolution structures by using an array of many antennas to emulate a single lens whose aperture is the greatest distance between a pair of antennas (Thompson et al. 2001). By measuring the visibility, or the interference fringes of the radio signal at every pair of antennas, an array samples the two-dimensional Fourier transform of the spatial intensity distribution of the source. Ideally, if we thoroughly sample the Fourier, or u-v plane, we can then invert the transform to reconstruct the object. The vast amount of data required has motivated a new generation of ambitious interferometers, including the Atacama Large Millimetre/submillimetre Array (ALMA) and the Square Kilometre Array (SKA), which will use several thousand antennas. Meanwhile, smaller interferometers often sample over a period of time, allowing the rotation of the earth to naturally produce new baselines.

Despite such measures, there are always irregular holes on the u-v plane where sampling of the visibility function is thin or simply nonexistent. This data deficiency is currently managed by interpolating or filling in zeros for unknown visibility values, and applying deconvolution algorithms such as CLEAN and its variants (Högbom 1974; Clark 1980) to the resulting dirty images. However, it may not be necessary to collect the extensive data set in the first place.

Among signal processing’s most promising developments in recent years is the theory of compressed sensing (CS), which has shown that the information of a signal can be recovered even when sampling does not fulfill the fundamental Nyquist rate (Donoho 2006; Candès et al. 2006a,b). The theory revolves around a priori knowledge that the signal is sparse or compressible in some basis, in which case its information naturally resides in a relatively small number of coefficients. Instead of directly sampling the signal, whereby full sampling would be inevitable in locating every non-zero or significant coefficient, CS allows us to compute just a few inner products of the signal along selected measurement vectors of certain favorable characteristics. The novelty of CS is that it takes advantage of signal compressibility to alleviate expensive data acquisition, not just expensive data storage.

It is well established that objects typical of astronomic study are sparse or compressible (Polygiannakis 2003; Dollet 2004); indeed, they are often sparse in the natural pixel basis. Recent studies have recognized this innate agreeability with CS, examining its potential in radio interferometry compared to traditional deconvolution methods (Wiaux et al. 2009; Li et al. 2011) as well as methods for applying it to wide field-of-view interferometry (McEwen & Wiaux 2011). As in traditional interferometry, however, reliable imaging will depend heavily on the sample distribution the array produces on the u-v plane. CS involves unusual premises for sampling, e.g., that we actively purpose to undersample, and rethinking array design to complement these premises is a critical step before CS can be applied in practice.

In this paper, we survey idealized arrays that optimize the performance of a CS recovery algorithm known as orthogonal matching pursuit (OMP; Davis et al. 1997; Pati et al. 1993; Tropp & Gilbert 2007) for snapshot-mode observations when objects are sparsely represented 1) in the pixel basis and 2) by the block discrete cosine transform (BDCT). We focus on how arrays affect measurement matrix incoherence, a popular parameter in CS performance guarantees, and also simulate reconstructions of both point and extended objects. Among the tested arrays, we find that with the pixel basis a uniform random distribution of antennas performs best, while with the BDCT a normal random distribution performs best. (Initial results with the pixel basis were also briefly summarized in Fannjiang 2011.) We also study the benefits of slight perturbation to the VLA’s patterned “Y” array, as well as the unexpected inability of measurement matrix incoherence to predict OMP reconstruction behavior with the BDCT.

In Sect. 2, we give a brief description of the CS framework as it pertains to radio interferometry and array optimization. The arrays under study are summarized in Sect. 3. Using the pixel basis, Sect. 4 analyzes how these arrays affect the incoherence of the measurement matrix, as well as OMP reconstructions of point and extended objects. Section 5 does the same in under-resolved conditions. Section 6 adopts the BDCT instead of the pixel basis to sparsely represent objects, also examining measurement matrix incoherence and extended object reconstructions. The conclusions are drawn in Sect. 7.

2. Compressed sensing

2.1. Overview

Consider a signal , which has a sparse representation s with the columns of an N × N basis matrix Ψ, such that x = Ψs. Given that s is S-sparse, meaning it has only S ≪ N non-zero components (or, more generally, given that s is S-compressible and has only S ≪ N significant components) and given measurement vectors of certain desirable characteristics, CS proposes that we should not have to take the complete set of N inner-product measurements to recover the signal. If Θ is an M × N matrix whose M < N rows are the measurement vectors, then CS aims to invert the underdetermined linear system y = ΘΨs = Φs, where y is a vector of the M < N measurements of x and we call Φ the measurement matrix.

If s is sparse, it follows that we seek a sparse solution to the inverse problem. Indeed, in the absence of noise s is the sparsest solution, i.e., subject to with the least number of non-zero components. A direct search for this solution, however, is computationally intractable. Given Φ of favorable characteristics (described below), an ℓ1-minimization problem is often solved instead, such that we search for the solution with the smallest ℓ1-norm, or sum of the absolute values of the coefficients. CS recovery schemes also include greedy methods such as OMP, which we use in this paper. Faster than basis pursuit (BP; Chen et al. 1992) and LASSO (Tibshirani 1996), the classic ℓ1-minimization methods, OMP was also chosen for its parallels to CLEAN: both iteratively select point sources in a greedy fashion until the residual falls below some stopping threshold. The one difference is that OMP calculates each new residual by subtracting an orthogonal projection of the data onto the span of all columns of Φ selected so far, rather than simply subtracting the visibility of the point source selected in the current iteration (see Sect. 2.3, and Pati et al. 1993; Davis et al. 1997 for a rigorous presentation).

Recovering x requires that the measurement matrix

Φ = ΘΨ does not corrupt or lose key features of

s in mapping higher-dimension

s to the lower-dimension y.

An intuitive ideal is that Φ should nearly preserve the Euclidean norm of

s, which means that every possible subset of

S columns of Φ should act close to an orthonormal system.

A rigorous formulation of such a quality is known as the restricted isometry property

(RIP; Candès & Tao 2005), but it cannot be

verified empirically; checking every S-combination of columns of

Φ for near-orthogonality is a combinatorial process and

NP-hard. An alternative metric that we use is mutual coherence (MC),

which can guarantee the RIP (a relationship derived in Davenport et al. 2012, from results in Gers˘gorin 1931) and is well-suited for numerical experimentation. MC gives the

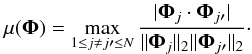

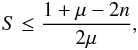

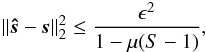

maximum correlation between any pair of columns of Φ:  (1)An

incoherent matrix with a near-zero MC is desired. Error bounds for the performance of the

mainstream CS recovery processes, including BP (Donoho et al. 2006), LASSO (Candès & Plan 2009), and OMP (Donoho et al. 2006) have

been developed based on MC. For OMP, suppose the sparsity S of

s satisfies

(1)An

incoherent matrix with a near-zero MC is desired. Error bounds for the performance of the

mainstream CS recovery processes, including BP (Donoho et al. 2006), LASSO (Candès & Plan 2009), and OMP (Donoho et al. 2006) have

been developed based on MC. For OMP, suppose the sparsity S of

s satisfies  (2)where

n is the ratio of ϵ, the norm of the noise in the

measurements, to the absolute value of the least non-zero component of

s. Then OMP is guaranteed to find the solution such that

(2)where

n is the ratio of ϵ, the norm of the noise in the

measurements, to the absolute value of the least non-zero component of

s. Then OMP is guaranteed to find the solution such that

(3)where

supp gives the support of its argument, i.e., the indices of the

non-zero components, and

(3)where

supp gives the support of its argument, i.e., the indices of the

non-zero components, and  (4)as

proven by Donoho et al. (2006). These bounds use

ϵ as the stopping criterion for OMP, i.e., the recovery process stops

when where is the reconstruction of I. An important note is

that these error bounds were developed to encompass all possible Φ and

s combinations, regardless of how ill-suited they are

for CS. In practice, when the measurement scheme and object sparsity are tailored for CS

application, OMP outperforms these bounds dramatically. In this paper, we thus refer to

these bounds as a general representation of the dependence of CS algorithms on measurement

matrix incoherence, rather than as a strict prediction of error in reconstruction.

(4)as

proven by Donoho et al. (2006). These bounds use

ϵ as the stopping criterion for OMP, i.e., the recovery process stops

when where is the reconstruction of I. An important note is

that these error bounds were developed to encompass all possible Φ and

s combinations, regardless of how ill-suited they are

for CS. In practice, when the measurement scheme and object sparsity are tailored for CS

application, OMP outperforms these bounds dramatically. In this paper, we thus refer to

these bounds as a general representation of the dependence of CS algorithms on measurement

matrix incoherence, rather than as a strict prediction of error in reconstruction.

2.2. Application to interferometric array optimization

The van Cittert-Zernike theorem gives the visibility function, as measured by two point

antennas, over the viewing window as  (5)where

I(p) is the intensity of the radiation

from angular direction p, and

b is the baseline, or displacement between the antennas,

projected onto the plane orthogonal to the source propagation and divided by the

wavelength observed. Assuming a small field of view, such that the object

I lives on the plane instead of the sphere, the visibility measurements

can be approximated as

(5)where

I(p) is the intensity of the radiation

from angular direction p, and

b is the baseline, or displacement between the antennas,

projected onto the plane orthogonal to the source propagation and divided by the

wavelength observed. Assuming a small field of view, such that the object

I lives on the plane instead of the sphere, the visibility measurements

can be approximated as  (6)In

other words, Θ is a “partial” two-dimensional Fourier transform, where the

array’s baselines b dictate which Fourier coefficients are

captured. Since we capture a Fourier coefficient with every baseline, or pair of antennas,

an array with a antennas yields

(6)In

other words, Θ is a “partial” two-dimensional Fourier transform, where the

array’s baselines b dictate which Fourier coefficients are

captured. Since we capture a Fourier coefficient with every baseline, or pair of antennas,

an array with a antennas yields  measurements in a snapshot. (Because an array cannot sample the entire u-v

plane, neither Θ nor V are properly

the Fourier transform matrix or the Fourier transform of I,

respectively. Unmeasured Fourier coefficients are absent in

V, and the corresponding rows are likewise absent in

Θ.)

measurements in a snapshot. (Because an array cannot sample the entire u-v

plane, neither Θ nor V are properly

the Fourier transform matrix or the Fourier transform of I,

respectively. Unmeasured Fourier coefficients are absent in

V, and the corresponding rows are likewise absent in

Θ.)

Due to the role of the baselines in selecting the rows of Θ, the array is critical in designing an incoherent Φ. In the well-sampled case, the general consensus is that a centrally condensed or bell-shaped distribution of baselines produces more favorable near-in and far side-lobe patterns, and thus superior images, than a uniform distribution of baselines (Boone 2002; Holdaway 1996; 1997; Kogan 1997; Woody 2001a,b). Such distributions pursue an ideal clean beam that is both highly localized and free of lobes, e.g., a Gaussian. Array optimization depends on the imaging objectives in mind, however, and as we intend to undersample in a way that facilitates CS, minimizing MC is our objective. Incidentally, the calculation of MC in Eq. (1) is synonymous to that of an array’s peak side-lobe when Ψ is the pixel basis, and can be interpreted similarly.

Early in the development of CS it was shown that a matrix built by uniformly and randomly selecting rows from a discrete Fourier transform obeys the RIP. Thus, objects can be stably recovered from partial, uniform random Fourier measurements through ℓ1-norm minimization (Candès et al. 2006a,b), the mainstream method for CS recovery. This fundamental result suggests that an array with a uniform random distribution of baselines should work well with CS when the pixel basis is used. In fact, (Candès et al. 2006a) noted that their results should be of particular interest to interferometric imaging in astronomy.

Object sparsity is the other key factor. A basis matrix Ψ that results in a more coherent measurement matrix Φ may compensate by providing a far sparser representation of the object than the natural pixel basis. In addition to the pixel basis, where Ψ is simply the identity, we also consider the two-dimensional block discrete cosine transform (BDCT). Used extensively in image compression, the BDCT divides an image into blocks and decomposes each into a sum of cosines of different frequencies. In both bases we focus on the suitability of random and randomized arrays, as random sampling has often proven to be inseparable from CS theory. We compare their performances with that of the “Y”-shaped configuration currently used by the Very Large Array (VLA). To study the most basic behavior of arrays, we focus on snapshot-mode observations and do not involve Earth rotation.

2.3. Orthogonal matching pursuit

OMP is a variant of the matching pursuit (MP) process, which searches for the sparsest reconstruction subject to ΦI = V by iteratively projecting the data V onto greedily chosen columns of the measurement matrix Φ until a “best match” to the object I is found. We start with the assumption that the original object is S-sparse (S is unknown), and that the data is therefore a linear combination of S columns, not all N columns, of Φ. The goal is to find which subset of S columns participate, as we have no idea which S components of are non-zero. By finding these S columns, OMP can compute z, a vector of the non-zero components of the reconstruction .

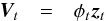

We first initialize the residual

r0 = V, the set

of chosen column indices α0 = ∅, and the matrix of chosen

columns φ0 to the empty matrix. At iteration t

OMP finds the column of Φ that has the highest coherence with the

residual, as this column likely contributes the most to the remaining data:  (7)where

Φk is the kth column of

Φ. This column should represent the brightest remaining pixel in the object

I. We then add

kt to the set of chosen column indices, and

add Φkt to the matrix

of chosen columns.

(7)where

Φk is the kth column of

Φ. This column should represent the brightest remaining pixel in the object

I. We then add

kt to the set of chosen column indices, and

add Φkt to the matrix

of chosen columns.  (8)

(8)![\begin{eqnarray} \phi_t &=& [ \phi_{t - 1} \quad \boldsymbol{\Phi}_{k_t}]. \end{eqnarray}](/articles/aa/full_html/2013/11/aa21079-13/aa21079-13-eq61.png) (9)We

can then solve the least-squares problem to update

(9)We

can then solve the least-squares problem to update  (10)and

use it to update the data and the residual.

(10)and

use it to update the data and the residual.

(11)

(11) (12)OMP

then returns to Eq. (7) and iterates unless

| |rt||2 ≤ δ||V||2,

where δ||V||2 is the

stopping criterion (here, δ = 0.01 due to the noise parameters described

in Sect. 4). In essence, at each iteration OMP

greedily choses a column from Φ, and calculates a new residual by subtracting

away from the data its orthogonal projection onto the span of all the columns chosen so

far. Ideally, each iteration locates the brightest remaining pixel in the object

I, and removes the collective contribution from all the

pixels chosen so far from the data. Let

(12)OMP

then returns to Eq. (7) and iterates unless

| |rt||2 ≤ δ||V||2,

where δ||V||2 is the

stopping criterion (here, δ = 0.01 due to the noise parameters described

in Sect. 4). In essence, at each iteration OMP

greedily choses a column from Φ, and calculates a new residual by subtracting

away from the data its orthogonal projection onto the span of all the columns chosen so

far. Ideally, each iteration locates the brightest remaining pixel in the object

I, and removes the collective contribution from all the

pixels chosen so far from the data. Let  and denote the final outputs of OMP. Then the reconstruction has non-zero components only

at the indices in

and denote the final outputs of OMP. Then the reconstruction has non-zero components only

at the indices in  ,

where its non-zero component at km is the

mth component of . Here we use the most generic form of OMP, which

obeys the process above with no other constraints on the reconstruction (in particular, we

did not enforce non-negativity).

,

where its non-zero component at km is the

mth component of . Here we use the most generic form of OMP, which

obeys the process above with no other constraints on the reconstruction (in particular, we

did not enforce non-negativity).

As formally shown in (Lannes et al. 1997), the traditional deconvolution algorithm CLEAN is a non-orthogonal MP process. Each iteration greedily chooses the brightest remaining pixel in the image and removes its contribution to the data, until the residual falls below some threshold. The critical difference between OMP and non-orthogonal MPs like CLEAN is Eq. (10): CLEAN does not project the data onto all the columns chosen so far, which enables OMP to flexibly update the components of z on all past chosen columns at every iteration. The basic CLEAN simply removes the component of the data on the column chosen in the current iteration. This difference makes OMP the superior recovery algorithm: when the dimension of z is large, the orthogonal projection enables the residual to converge to 0 far more quickly. Unlike MP, OMP is also guaranteed to converge in a finite number of iterations in finite-dimensional spaces (Davis et al. 1994; 1997). OMP and its many variants are widely used in CS, and their structural closeness to CLEAN is part of the natural suitability of CS for radio interferometry.

3. Arrays

The arrays under study are the uniform random array (URA); the truncated normal random array (NRA), where sampling coverage beyond the predetermined aperture is removed; an array defined by the Hammersley point set, a low-discrepancy sequence widely used in quasi-Monte Carlo methods (Neiderreiter 1987); and a VLA-based “Y” configuration. (In “Y” arrays other than of 27 antennas, the distances between antennas along an arm are based on the current ratios of distances in the VLA). We also create a modification of the “Y” array, called YOPP (“Y”, Outermost antennas Perpendicularly Perturbed), in which the three outermost antennas on each arm of the “Y” are perturbed a random distance in the direction perpendicular to their respective arm. The maximum perturbation distance is set to 30% of the mean distance between adjacent antennas on an arm (see Fig. 4). In arrays other than of 27 antennas, the outermost third of the antennas on each arm are perturbed this way.

The standard deviation (SD) of an NRA describes the continuous normal distribution sampled by the antenna locations. It is not the standard deviation of the actual discrete distribution of antennas. Also note that the baseline distribution, not the antenna distribution, determines where the u-v plane is sampled. In particular, the URA does not correspond to a uniform random distribution of baselines.

|

Fig. 1 Antenna distribution of a URA (top) and its baseline distribution (bottom). |

|

Fig. 2 Antennas distributed according to the Hammersley point set (top) and the corresponding baseline distribution (bottom). |

|

Fig. 3 Antenna distribution of an NRA with SD = 0.18 (top) and its baseline distribution (bottom). |

|

Fig. 4 Antenna distribution of the modified array “YOPP” (top) and its baseline distribution (bottom), compared to the antenna and baseline distribution of the VLA-based “Y” array. Original versions of these figures appeared in Fannjiang (2011). |

4. Pixel basis in the well-resolved case

We compute the MC of measurement matrices as the number of antennas increases in each

array. For the randomized arrays (URA, NRA, and YOPP), the MC plotted is the mean of 50

independent instances of the array. We also run OMP reconstructions on objects of increasing

sparsity, which are 60 × 60 grids with random point sources and Gaussian white noise. The

noise has a standard deviation of  ,

where M is the dimension of V. For the

randomized arrays, the reconstruction rate plotted is the mean of 5 independent instances of

the array, on 200 independent trials for each level of object sparsity. To provide a direct

comparison to the existing VLA configuration, arrays with 27 antennas are used in the

reconstructions. Correspondingly, the measurement matrices in the MC computations have the

dimensions 351 × 3600, where

,

where M is the dimension of V. For the

randomized arrays, the reconstruction rate plotted is the mean of 5 independent instances of

the array, on 200 independent trials for each level of object sparsity. To provide a direct

comparison to the existing VLA configuration, arrays with 27 antennas are used in the

reconstructions. Correspondingly, the measurement matrices in the MC computations have the

dimensions 351 × 3600, where  . In

the reconstructions of random point sources, a reconstruction is deemed successful if the

relative error (RE) from the original object I is less than or

equal to 0.01, where the RE is defined as

. In

the reconstructions of random point sources, a reconstruction is deemed successful if the

relative error (RE) from the original object I is less than or

equal to 0.01, where the RE is defined as

(13)

(13)

4.1. Mutual coherence and random point sources

In Fig. 5, the URA provides the most incoherent measurement matrices, suggesting its suitability for OMP. It is also the only array whose MC appears to approach zero as the number of antennas grows large – an important detail, as in theory it implies that OMP reconstructions can be improved indefinitely by adding more antennas to the array. The particular normal random array (NRA) shown does not reveal how MC varies by SD (see Sect. 4.2); here, with SD = 0.14, the array provides highly coherent measurement matrices. The “Y” array also gives a higher MC than the uniform random array, as its distinct patterns likely strengthen correlations between the columns of Φ. Similarly, the Hammersley array is generated by a deterministic formula, and the resulting correlations in the measurement information probably cause its poor performance. The MC curves of both these arrays also fluctuate, rather than monotonically decrease: with such rigid patterns, even the slightest deviations in the relationships between antennas can throw off trends in the MC.

The randomized modification YOPP performs nearly identically to the URA when the number of antennas is not too high, e.g. with 27 antennas. This has exciting implications for the VLA and other patterned arrays – with slight random perturbation to just 9 antennas, the MC is significantly decreased, tailoring it for CS application. The benefits do not appear to last as the number of antennas grows large, but for a moderate number it is a surprisingly effective improvement.

Corresponding to the MC results, the OMP reconstruction performances of the URA and YOPP with 27 antennas are nearly identical. Both attain the highest “threshold” sparsity, or maximum object sparsity with 100% reconstruction success – about 200 point sources – and are clearly superior to the “Y” array and NRA, which have threshold sparsities of about 100 and 125, respectively. The high MC of the Hammersley array causes its reconstruction performance to decay rapidly with only a handful of point sources.

|

Fig. 5 Mutual coherence of measurement matrices as the number of antennas in the array increases (top) and rate of successful OMP reconstructions of random point sources with arrays of 27 antennas (bottom). For the randomized arrays (URA, NRA, and YOPP), the MC plotted is the mean of 50 independent instances of the array. The reconstruction rate plotted is the mean of 5 independent instances of the array, on 200 independent trials for each level of sparsity. A reconstruction is deemed successful if the relative error RE ≤ 0.01. Original versions of these figures appeared in Fannjiang (2011). |

4.2. Uniform and normal random arrays

This section examines the spectrum from centrally condensed to uniform baseline distributions, which has been a focus of array design in traditional interferometry. Specifically, we look at normal and uniform random arrays of antennas. As the SD of an NRA decreases and the array becomes more concentrated, the measurement matrices grow more coherent; conversely, as the arrays become less concentrated, the MC decreases and approaches that of the URA. The OMP reconstruction results largely follow these trends; however, there are two interesting differences. Though the MC curves decrease at a roughly linear rate as the SD of the array increases, the reconstruction probability curves do not appear to increase in any clear mathematical relationship to the MC results, as the relationships in (Donoho et al. 2006) would suggest.

Secondly, though an NRA cannot better the reconstruction performance of a URA, it attains the same threshold sparsity with SD ≥ 0.16. However, the measurement matrix of an NRA with an SD of 0.16 is still much more coherent than that of a URA. These discrepancies between the two experiments, though subtle, indicate the presence of factors at play other than MC, an observation that becomes more relevant with the BDCT in Sect. 6.

We also emphasize that while the NRA may be able to imitate the URA with 27 antennas, its MC behavior still differs fundamentally as it fails to continue approaching zero as the number of antennas grows large.

|

Fig. 6 Mutual coherence of measurement matrices as the number of antennas in the array increases (top) and rate of successful OMP reconstructions of random point sources (bottom) as the standard deviation (SD) of the normal random array is increased. The MC plotted is the mean of 50 independent instances of each array. The reconstruction rate plotted is the mean of 5 independent instances of each array, on 200 independent trials for each level of sparsity. A reconstruction is deemed successful if the relative error RE ≤ 0.01. |

4.3. Extended object reconstructions

The probability curves in the previous subsections are based on reconstructions of random point sources. Here we broaden those results to reconstructions of a more realistic extended source, the black hole system 3C75. A 120 × 120-pixel image is used as the object, where all pixel brightness values are normalized by the maximum brightness value to lie on [0, 1]. Snapshot-mode measurements are simulated from 100 antennas. Again, the URA produces an exceptionally accurate reconstruction of the object in Fig. 7b. The performances of the two NRAs reflect the nuances in antenna concentration seen in Fig. 6: though an array with SD = 0.14 produces a distinct checkerboard pattern of missed pixels, increasing the SD to just 0.18 erases these artifacts and greatly improves the accuracy of the reconstruction. Visually speaking, its reconstruction appears comparable to the URA’s benchmark.

The checkerboard artifact displayed by an NRA with SD = 0.14 is an interesting phenomenon that can be linked to high MC when pixels of non-zero intensity are adjacent (rather than randomly scattered, as in the previous subsections). Adjacent columns of Φ, corresponding to the measurement information of adjacent pixels, naturally tend to be more coherent than columns that are far apart. Thus, OMP is more likely to misinterpret which of two given columns contributed most to the data (equivalently, which of the two pixels is brighter in the object) if they are adjacent. This mishandling of adjacent pixels is more prone to occur if Φ has a high MC, and could thus cause the checkerboard pattern as well as the striped artifacts produced by the “Y” array. The slight randomization in the YOPP array remedies these artifacts enormously, again proving to be a simple yet effective modification. Though the noise-like structures to the left do display faint checkerboard artifacts, the main body of the galaxy is reconstructed accurately and the relative error (RE) is comparable to that of an NRA with SD = 0.18.

The point and extended source reconstructions both confirm the URA as the ideal array for CS with the pixel basis, notably over patterned arrays like the VLA. This finding counters that of Wenger et al. (2010), the only other study on interferometric arrays for CS to our knowledge, which found that a uniform random baseline distribution (URB) performs significantly worse than the patterned VLA baseline distribution. However, a measurement matrix Φ generated with a URB is precisely the matrix of uniform, random Fourier measurement vectors proven in Candès et al. (2006a,b) to obey conditions guaranteeing stable CS recovery. Though we used a URA, whose baselines do not strictly follow a uniform random distribution, the underlying principles of uniformity and randomness make our results much more consistent with the established theorem. Random, as opposed to deterministic, sampling has always been fundamental to CS theory.

|

Fig. 7 OMP reconstructions with the pixel basis of the object a), radio emission from 3C75, simulated with measurements from a URA in b) (RE = 1.69 × 10-4), an NRA of SD = 0.14 in c) (RE = 0.763), an NRA of SD = 0.18 in d) (RE = 0.0150), the VLA-based “Y” array in e) (RE = 0.179), and the YOPP array in f) (RE = 0.0187). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/29 (courtesy of NRAO/AUI and F.N. Owen, C.P. O’Dea, M. Inoue, and J. Eilek). Original versions of a)–c), e), and f) appeared in Fannjiang (2011). |

|

Fig. 8 Mutual coherence of measurement matrices by aperture size (top) and rate of successful OMP reconstructions of random point sources (bottom) in the under-resolved case. Both experiments are with arrays of 27 antennas. In the top panel, the x-axis gives the ratio of the aperture size in the under-resolved case to the aperture size in the well-resolved case. In the bottom panel, this relative aperture size is 0.5. For the randomized arrays (URA, NRA, and YOPP), the MC plotted is the mean of 50 independent instances of the array. The reconstruction rate plotted is the mean of 5 independent instances of the array, on 500 independent trials for each level of sparsity. A reconstruction is deemed successful if the relative error RE ≤ 0.01. Original versions of these figures appeared in Fannjiang (2011). |

5. Super-resolution with the pixel basis

Here we numerically test the suitability of arrays for CS in the under-resolved case. The

diffraction limit is broken by decreasing the aperture of the array such that

, where A is the

aperture, R is the angular resolution, and λ is the signal

wavelength. R and λ are kept constant. All other

experimental parameters follow those described in Sect. 4.

, where A is the

aperture, R is the angular resolution, and λ is the signal

wavelength. R and λ are kept constant. All other

experimental parameters follow those described in Sect. 4.

5.1. Array comparisons with random point sources

For arrays of 27 antennas, the URA again provides the lowest MC, and by a far greater

margin than in the well-resolved case when  falls

below 0.8. The OMP reconstruction curves, simulated when

falls

below 0.8. The OMP reconstruction curves, simulated when

,

confirm the URA’s capacity for super-resolution of point sources. While it attains a

threshold sparsity of about 125 point sources, no other tested array displays anything

near this potential. The URA’s super-resolving capabilities are likely due to its higher

proportion of large baselines, which results in an increased sensitivity to the

high-frequency Fourier components of an object. The array’s reconstructions of point

sources in Sect. 4 are probably superior for the same

reason.

,

confirm the URA’s capacity for super-resolution of point sources. While it attains a

threshold sparsity of about 125 point sources, no other tested array displays anything

near this potential. The URA’s super-resolving capabilities are likely due to its higher

proportion of large baselines, which results in an increased sensitivity to the

high-frequency Fourier components of an object. The array’s reconstructions of point

sources in Sect. 4 are probably superior for the same

reason.

Since decreasing aperture size has the effect of condensing an array, it is intuitive to reason that a far greater SD than in the well-resolved case is needed to imitate a URA. Similarly, the random perturbation in YOPP does not appear to improve on the “Y” array here. The maximum perturbation distance in YOPP is based on the mean distance between antennas, and this mean distance grows irrelevantly small with smaller apertures. Far greater perturbation, perhaps to an extent that the deterministic “Y” framework is unrecognizable, is probably needed to compensate.

5.2. Extended source reconstructions

OMP reconstructions are run of the radio image of 3C75 in the under-resolved case, setting

. The

advantages of the URA are even more extraordinary here, as the accuracy of its

reconstruction appears unaffected from the well-resolved case. Predictably, the other two

arrays give much weaker performances: the “Y” array barely confirms the galaxy’s

existence, while the NRA’s reconstruction (using an SD that emulates a URA in the

well-resolved case) is marred by artifacts similar to the checkerboard pattern seen in the

well-resolved case.

. The

advantages of the URA are even more extraordinary here, as the accuracy of its

reconstruction appears unaffected from the well-resolved case. Predictably, the other two

arrays give much weaker performances: the “Y” array barely confirms the galaxy’s

existence, while the NRA’s reconstruction (using an SD that emulates a URA in the

well-resolved case) is marred by artifacts similar to the checkerboard pattern seen in the

well-resolved case.

|

Fig. 9 OMP reconstructions with the pixel basis of the object from Fig. 7a, radio emission from 3C75, simulated with measurements from a URA in

a) (RE = 2.89 × 10-4), an NRA of

SD = 0.18 in b) (RE = 5.4617), the

“Y” array in c) (RE = 0.840), and the YOPP array in

d) in the under-resolved case when

|

6. The BDCT

Here the basis matrix Ψ is the BDCT matrix for 4 × 4-pixel transform blocks. Unpublished results with 2 × 2- and 8 × 8-pixel blocks show the same relationships between the arrays.

6.1. Array comparisons through mutual coherence

We calculate the MC of matrices Φ that give measurements for a 32 × 32-pixel object. Figure 10 reveals some of the same trends as in the pixel basis: the URA provides the most incoherent measurement matrices, while the “Y” array gives a much higher MC. The BDCT also seems to have amplified the effects of patterning, as shown in the “Y” array’s erratic MC curve. However, the NRAs have become more congruent with the URA, in that they decay in a like manner as the number of antennas increases. In theory, CS reconstructions could thus be improved in a predictable fashion simply by adding more antennas to either array. This similarity allows an NRA with SD ≥ 0.28 to emulate the URA for arbitrary numbers of antennas, not just up to 27 as in the pixel basis.

Though the basic trend of higher SD resulting in lower MC is also exhibited, what is startling is how OMP reconstructions with the BDCT fail to correspond to the MC results, as seen in the next subsection.

|

Fig. 10 Mutual coherence of measurement matrices as the number of antennas in the array increases, using the BDCT matrix as the basis matrix Ψ. For the randomized arrays (URA, NRA), the MC plotted is the mean of 50 independent instances of the array. |

6.2. Reconstructing extended sources

The OMP extended-source reconstructions in Figs. 11–14 deviate from the MC results in intriguing ways. Most notably, the URA never provides the most accurate reconstruction of the object, despite providing the most incoherent measurement matrices. The URA’s reconstructions in Figs. 11b–14b introduce spurious artifacts to the backgrounds of the galaxies, and also distort structures within the galaxies. The red- and yellow-colored internal structures of Fig. 11a are broadened in Fig. 11b; the central dark blue region of Fig. 12a is highly distorted in Fig. 12b; and the large red structure on the right-hand side of Fig. 13a is much diminished in Fig. 13b. In all cases, an NRA with an SD as low as 0.14 provides the most faithful reconstruction. Reinforcing the contradiction, the measurement matrix produced with this NRA has an MC of 0.28 – more than twice the MC of the URA, 0.12. In the pixel basis the NRA can also emulate a URA, but only when the SD is great enough that the MC of the two arrays is also comparable. Reconstructions (not shown) of the sources NGC 2403, M33, and 3C31 were also run, and demonstrate the same surprising trend: the NRA clearly provides the most faithful reconstructions with the smallest RE, despite giving much higher MC than the URA.

Similarly, despite giving the most coherent measurement matrices by far (see Fig. 10), the “Y” array provides reconstructions with smaller RE than the URA in Figs. 12d and 13d. In Fig. 12d, its reconstruction preserves the central dark blue region of Cassiopeia A much more accurately than the URA, and in Fig. 13d it preserves the large red structure on the right-hand side of M87 that the URA’s reconstruction fails to recover. The “Y” array does suffer from an idiosyncratic distribution of error known as the blocking effect: when too few BDCT components are recovered by OMP, the missing components (typically high-frequency components) in each block create breaks in features that should span the blocks continuously. Despite the blocking effect, however, even when the “Y” array does not outperform the URA (Figs. 11d and 14d) its RE is surprisingly comparable given that it produces far more coherent measurement matrices (RE = 0.0752 vs. the URA’s RE = 0.0633 in Fig. 11, and RE = 0.0137 vs. the URA’s RE = 0.0082 in Fig. 14).

|

Fig. 11 OMP reconstructions of the object a), radio emission from the Crab Nebula, using the BDCT as the sparsifying basis. The original object is 120 × 120 pixels and measurements are simulated from 100 antennas. Reconstructions are run with measurements from a URA in b) (RE = 0.0633), an NRA of 0.14 in c) (RE = 0.0378), and the VLA-based “Y” array in d) (RE = 0.0752). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/393 (courtesy of NRAO/AUI and M. Bietenholz). |

|

Fig. 12 OMP reconstructions of the object a), radio emission from Cassiopeia A, using the BDCT as the sparsifying basis. The original object is 200 × 200 pixels and measurements are simulated from 180 antennas. Reconstructions are run with measurements from a URA in b) (0.0434), an NRA of 0.14 in c) (RE = 0.0033), and the VLA-based “Y” array in d) (RE = 0.0119). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/395 (courtesy of NRAO/AUI). |

This discrepancy between the MC results and the extended source reconstructions has several possible roots – first, it is important to note that the calculation of MC is only mathematically synonymous to the calculation of the array’s peak side-lobe in the pixel basis. With the BDCT (or any other transform) incorporated into the measurement matrix, the physical analogy to the peak side-lobe breaks down and the MC cannot be interpreted in the same way. Though it is still theoretically relevant to array optimization through Eq. (4), it no longer has any direct physical meaning to the problem. Many CS sampling studies have focused solely on MC or the RIP – just as many array studies have focused solely on side-lobes – but such indicators may have different implications depending on the sparsifying basis. Though the suitability of sparsifying bases for image reconstruction in radio interferometry was noted as early as in (Starck et al. 1994), we cannot fully appreciate CS in the field until we also understand how different sparse representations will affect array optimization.

We also note that, unlike in the pixel basis, the random perturbation of the YOPP array (not shown) does not significantly decrease the RE or blocking effect of the “Y” array’s reconstructions (unless the perturbation is so severe the original “Y” framework is no longer recognizable). This is likely because the YOPP was designed to emulate a URA, the superior array for the pixel basis, rather than an NRA, the superior array for the BDCT: the largest baselines were perturbed, corresponding to the URA’s higher proportion of larger baselines compared to the NRA. Developing a different modification scheme for the “Y” array with the BDCT, such that it better emulates an NRA, is therefore of interest.

|

Fig. 13 OMP reconstructions of the object a), radio emission from M87, using the BDCT as the sparsifying basis. The original object is 120 × 120 pixels and measurements are simulated from 100 antennas. Reconstructions are run with measurements from a URA in b) (RE = 0.2876), an NRA of 0.14 in c) (RE = 0.002), and the VLA-based “Y” array in d) (RE = 0.009). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/57 (courtesy of NRAO/AUI and F.N. Owen, J.A. Eilek, and N.E. Kassim). |

|

Fig. 14 OMP reconstructions of the object a), radio emission from 3C58, using the BDCT as the sparsifying basis. The original object is 160 × 160 pixels and measurements are simulated from 100 antennas. Reconstructions are run with measurements from a URA in b) (RE = 0.0082), an NRA of 0.14 in c) (RE = 0.0028), and the VLA-based “Y” array in d) (RE = 0.0137). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/529 (courtesy of NRAO/AUI and M. Bietenholz, York University). |

7. Conclusions

In the pixel basis, we showed that the URA optimizes the performance indicator MC and sets the benchmark in OMP reconstructions of both point and extended sources. Though this contradicts the general agreement on centrally condensed arrays in the well-sampled case, it is linked to previous results that arrays with small numbers of antennas should aim for a more uniform baseline distribution (Boone 2002; Holdaway 1997; Woody 2001a) so that the near-in side-lobes do not overpower the far side-lobes. The URA also showed promise in allowing for super-resolution in CS recovery, whereas the performance of all other tested arrays decayed rapidly in the under-resolved case.

When we used the BDCT to amplify object sparsity, despite giving significantly higher MC an NRA outperforms the URA to provide the most faithful reconstructions of extended sources. This conveniently coincides with the preference for centrally condensed arrays in the well-sampled case. Such results also reveal the nearsightedness of solely analyzing MC, as is often done in optimizing sample distributions for CS, since this indicator does not preserve its physical meaning among different sparse representations. An array’s MC is analogous to its peak side-lobe in the pixel and other spike-like bases, but cannot be interpreted the same way in other bases.

We also highlighted the YOPP array as a model for enhancing the VLA’s “Y” configuration with a deceptively trivial amount of randomization, as it emulates a URA in reconstructing both point and extended sources with the pixel basis. The principle of slight perturbation could also apply to other patterned arrays, making them far more conducive to CS while preserving their practicality. This aligns with the early fundamental observation in Lo (1964) that a significantly lower number of randomly spaced sensors are needed than regularly spaced sensors to achieve the same low level of side-lobes. In the context of CS, when we are constrained by a highly insufficient number of sensors, this principle becomes indispensable.

Our results have general implications and serve as an introductory look into arrays for CS in radio interferometry. Various factors can be studied further, in particular the sparsifying basis, which is critical to any application of CS. That sparsifying bases can incorporate redundancy make them far more powerful than the pixel basis at representing natural images sparsely, and finding the optimal sparse representation for astronomical objects will clearly improve CS performance. Array design with respect to wavelet transforms and frames, such as the isotropic undecimated wavelet transform (Starck et al. 2006), is of interest: unlike the BDCT, such multiresolution approaches avoid the issue of optimizing a block size, which depends on the scale of the structures being imaged. The CS recovery algorithm is also a key variable. OMP was chosen here because of its parallels to CLEAN, as well as its superior computational efficiency compared to the optimization-based BP and LASSO, which is critical in dealing with large data. However, these optimization approaches, as well as adaptations of the basic OMP framework, may respond to arrays differently. Also important is incorporating Earth-rotation aperture synthesis, and running reconstructions on real rather than simulated data. As we look to deal with new floods of data from massive arrays, including the ALMA and the SKA, addressing these factors in array design for CS will become increasingly critical.

Acknowledgments

The author is grateful for financial support of the work from the Intel Science Talent Search, the Intel International Science and Engineering Fair, and the Junior Science & Humanities Symposium.

References

- Boone, F. 2002, A&A, 386, 1160 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Candès, E., & Plan, Y. 2009, Ann. Stat., 37, 2145 [CrossRef] [Google Scholar]

- Candès, E., & Tao, T. 2005, IEEE Trans. Inform. Theory, 51, 4203 [CrossRef] [Google Scholar]

- Candès, E., Romberg, J., & Tao, T. 2006a, Commun. Pure Appl. Math., 59, 1207 [CrossRef] [MathSciNet] [Google Scholar]

- Candès, E., Romberg, J., & Tao, T. 2006b, IEEE Trans. Inform. Theory, 52, 489 [CrossRef] [Google Scholar]

- Chen, S., Donoho, D., & Saunders, M. 1998, SIAM J. Sci. Comput., 20, 33 [Google Scholar]

- Clark, B. G. 1980, A&A, 89, 377 [NASA ADS] [Google Scholar]

- Davenport, M. A., Duarte, M. F., Eldar, Y. C., & Kutyniok, G. 2012, in Compressed Sensing: Theory and Applications (Cambridge University Press) [Google Scholar]

- Davis, G., Mallat, S., & Zhang, Z. 1994, Opt. Eng., 33, 2183 [NASA ADS] [CrossRef] [Google Scholar]

- Davis, G. M., Mallat, S., & Avellaneda, M. 1997, Constr. Approx, 13, 57 [Google Scholar]

- Dollet, C., Bijaoui, A., & Mignard, F. 2004, A&A, 426, 729 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Donoho, D. L. 2006, IEEE Trans. Inform. Theory, 52, 1289 [CrossRef] [MathSciNet] [Google Scholar]

- Donoho, D. L., Elad, M., & Temlyakov, V. 2006, IEEE Trans. Inform. Theory, 52, 6 [Google Scholar]

- Fannjiang, C. 2011, The Leading Edge, 30, 996 [CrossRef] [Google Scholar]

- Geršgorin, S. 1931, Izv. Akad. Nauk SSSR Ser. Fiz.-Mat., 6, 749 [Google Scholar]

- Högbom, J. A. 1974, A&AS, 15, 417 [NASA ADS] [Google Scholar]

- Holdaway, M. A. 1996, MMA Memo No. 156 [Google Scholar]

- Holdaway, M. A. 1997, MMA Memo No. 172 [Google Scholar]

- Kogan, L. 1997, MMA Memo No. 171 [Google Scholar]

- Lannes, A., Anterrieu, E., & Maréchal, P. 1997, A&AS, 123, 183 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Li, F., Cornwell, J., & de Hoog, F. 2011, A&A, 528, A31 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Lo, T. 1964, IEEE Trans. Ant. Prop., 12, 257 [NASA ADS] [CrossRef] [Google Scholar]

- McEwen, J. D., & Wiaux, Y. 2011, MNRAS, 413, 1318 [NASA ADS] [CrossRef] [Google Scholar]

- Pati, Y. C., Renzaiifar, R., & Krishnaprasad, P. S. 1993, in Proc. 27th Asilomar Conf. on Signals, Systems, and Computers, 40 [Google Scholar]

- Polygiannakis, J., Preka-Papadema, P., & Moussas, X. 2003, MNRAS, 343, 725 [NASA ADS] [CrossRef] [Google Scholar]

- Starck, J.-L., Bijaoui, A., Lopez, B., & Perrier, C. 1994, A&A, 283, 394 [Google Scholar]

- Starck, J.-L., Mouden, Y., Abrial, P., & Nguyen, M. 2006, A&A, 446, 1191 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Thompson, A. R., Moran, J. M., & Swenson, G. W. 2001, Interferometry and synthesis in radio astronomy (Wiley-VCH) [Google Scholar]

- Tibshirani, R. 1996, J. Roy. Stat. Soc. B, 58, 267 [Google Scholar]

- Tropp, J. A., & Gilbert, A. C. 2007, IEEE Trans. Inform. Theory, 53, 4655 [Google Scholar]

- Wenger, S., Darabi, S., Sen, P., Glaßmeier, K., & Magnor, M. 2010, Proc. IEEE ICIP, 1381 [Google Scholar]

- Wiaux, Y., Jacques, L., Puy, G., Scaife, A. M. M., & Vandergheynst, P. 2009, MNRAS, 395, 1733 [NASA ADS] [CrossRef] [Google Scholar]

- Woody, D. 2001a, ALMA Memo No. 389 [Google Scholar]

- Woody, D. 2001b, ALMA Memo No. 390 [Google Scholar]

All Figures

|

Fig. 1 Antenna distribution of a URA (top) and its baseline distribution (bottom). |

| In the text | |

|

Fig. 2 Antennas distributed according to the Hammersley point set (top) and the corresponding baseline distribution (bottom). |

| In the text | |

|

Fig. 3 Antenna distribution of an NRA with SD = 0.18 (top) and its baseline distribution (bottom). |

| In the text | |

|

Fig. 4 Antenna distribution of the modified array “YOPP” (top) and its baseline distribution (bottom), compared to the antenna and baseline distribution of the VLA-based “Y” array. Original versions of these figures appeared in Fannjiang (2011). |

| In the text | |

|

Fig. 5 Mutual coherence of measurement matrices as the number of antennas in the array increases (top) and rate of successful OMP reconstructions of random point sources with arrays of 27 antennas (bottom). For the randomized arrays (URA, NRA, and YOPP), the MC plotted is the mean of 50 independent instances of the array. The reconstruction rate plotted is the mean of 5 independent instances of the array, on 200 independent trials for each level of sparsity. A reconstruction is deemed successful if the relative error RE ≤ 0.01. Original versions of these figures appeared in Fannjiang (2011). |

| In the text | |

|

Fig. 6 Mutual coherence of measurement matrices as the number of antennas in the array increases (top) and rate of successful OMP reconstructions of random point sources (bottom) as the standard deviation (SD) of the normal random array is increased. The MC plotted is the mean of 50 independent instances of each array. The reconstruction rate plotted is the mean of 5 independent instances of each array, on 200 independent trials for each level of sparsity. A reconstruction is deemed successful if the relative error RE ≤ 0.01. |

| In the text | |

|

Fig. 7 OMP reconstructions with the pixel basis of the object a), radio emission from 3C75, simulated with measurements from a URA in b) (RE = 1.69 × 10-4), an NRA of SD = 0.14 in c) (RE = 0.763), an NRA of SD = 0.18 in d) (RE = 0.0150), the VLA-based “Y” array in e) (RE = 0.179), and the YOPP array in f) (RE = 0.0187). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/29 (courtesy of NRAO/AUI and F.N. Owen, C.P. O’Dea, M. Inoue, and J. Eilek). Original versions of a)–c), e), and f) appeared in Fannjiang (2011). |

| In the text | |

|

Fig. 8 Mutual coherence of measurement matrices by aperture size (top) and rate of successful OMP reconstructions of random point sources (bottom) in the under-resolved case. Both experiments are with arrays of 27 antennas. In the top panel, the x-axis gives the ratio of the aperture size in the under-resolved case to the aperture size in the well-resolved case. In the bottom panel, this relative aperture size is 0.5. For the randomized arrays (URA, NRA, and YOPP), the MC plotted is the mean of 50 independent instances of the array. The reconstruction rate plotted is the mean of 5 independent instances of the array, on 500 independent trials for each level of sparsity. A reconstruction is deemed successful if the relative error RE ≤ 0.01. Original versions of these figures appeared in Fannjiang (2011). |

| In the text | |

|

Fig. 9 OMP reconstructions with the pixel basis of the object from Fig. 7a, radio emission from 3C75, simulated with measurements from a URA in

a) (RE = 2.89 × 10-4), an NRA of

SD = 0.18 in b) (RE = 5.4617), the

“Y” array in c) (RE = 0.840), and the YOPP array in

d) in the under-resolved case when

|

| In the text | |

|

Fig. 10 Mutual coherence of measurement matrices as the number of antennas in the array increases, using the BDCT matrix as the basis matrix Ψ. For the randomized arrays (URA, NRA), the MC plotted is the mean of 50 independent instances of the array. |

| In the text | |

|

Fig. 11 OMP reconstructions of the object a), radio emission from the Crab Nebula, using the BDCT as the sparsifying basis. The original object is 120 × 120 pixels and measurements are simulated from 100 antennas. Reconstructions are run with measurements from a URA in b) (RE = 0.0633), an NRA of 0.14 in c) (RE = 0.0378), and the VLA-based “Y” array in d) (RE = 0.0752). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/393 (courtesy of NRAO/AUI and M. Bietenholz). |

| In the text | |

|

Fig. 12 OMP reconstructions of the object a), radio emission from Cassiopeia A, using the BDCT as the sparsifying basis. The original object is 200 × 200 pixels and measurements are simulated from 180 antennas. Reconstructions are run with measurements from a URA in b) (0.0434), an NRA of 0.14 in c) (RE = 0.0033), and the VLA-based “Y” array in d) (RE = 0.0119). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/395 (courtesy of NRAO/AUI). |

| In the text | |

|

Fig. 13 OMP reconstructions of the object a), radio emission from M87, using the BDCT as the sparsifying basis. The original object is 120 × 120 pixels and measurements are simulated from 100 antennas. Reconstructions are run with measurements from a URA in b) (RE = 0.2876), an NRA of 0.14 in c) (RE = 0.002), and the VLA-based “Y” array in d) (RE = 0.009). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/57 (courtesy of NRAO/AUI and F.N. Owen, J.A. Eilek, and N.E. Kassim). |

| In the text | |

|

Fig. 14 OMP reconstructions of the object a), radio emission from 3C58, using the BDCT as the sparsifying basis. The original object is 160 × 160 pixels and measurements are simulated from 100 antennas. Reconstructions are run with measurements from a URA in b) (RE = 0.0082), an NRA of 0.14 in c) (RE = 0.0028), and the VLA-based “Y” array in d) (RE = 0.0137). All pixel brightness values in the object and reconstructions are normalized by the object’s maximum pixel brightness value, to lie on [0, 1]. Image a) can be found at http://images.nrao.edu/529 (courtesy of NRAO/AUI and M. Bietenholz, York University). |

| In the text | |

Current usage metrics show cumulative count of Article Views (full-text article views including HTML views, PDF and ePub downloads, according to the available data) and Abstracts Views on Vision4Press platform.

Data correspond to usage on the plateform after 2015. The current usage metrics is available 48-96 hours after online publication and is updated daily on week days.

Initial download of the metrics may take a while.