| Issue |

A&A

Volume 534, October 2011

|

|

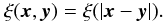

|---|---|---|

| Article Number | A76 | |

| Number of page(s) | 13 | |

| Section | Cosmology (including clusters of galaxies) | |

| DOI | https://doi.org/10.1051/0004-6361/201117284 | |

| Published online | 05 October 2011 | |

Constrained probability distributions of correlation functions

1

Argelander-Institut für Astronomie, Universität Bonn, Auf dem Hügel 71, 53121 Bonn, Germany

e-mail: peter@astro.uni-bonn.de

2

Max-Planck-Institut für Gravitationsphysik, Callinstraße 38, 30167 Hannover, Germany

e-mail: david.keitel@aei.mpg.de

Received: 18 May 2011

Accepted: 11 August 2011

Context. Two-point correlation functions are used throughout cosmology as a measure for the statistics of random fields. When used in Bayesian parameter estimation, their likelihood function is usually replaced by a Gaussian approximation. However, this has been shown to be insufficient.

Aims. For the case of Gaussian random fields, we search for an exact probability distribution of correlation functions, which could improve the accuracy of future data analyses.

Methods. We use a fully analytic approach, first expanding the random field in its Fourier modes, and then calculating the characteristic function. Finally, we derive the probability distribution function using integration by residues. We use a numerical implementation of the full analytic formula to discuss the behaviour of this function.

Results. We derive the univariate and bivariate probability distribution function of the correlation functions of a Gaussian random field, and outline how higher joint distributions could be calculated. We give the results in the form of mode expansions, but in one special case we also find a closed-form expression. We calculate the moments of the distribution and, in the univariate case, we discuss the Edgeworth expansion approximation. We also comment on the difficulties in a fast and exact numerical implementation of our results, and on possible future applications.

Key words: large-scale structure of Universe / methods: statistical / gravitational lensing: weak / galaxies: statistics / cosmology: miscellaneous

© ESO, 2011

1. Introduction

In several fields of science, there are observations that can be modelled as random processes, either time series or spatial random fields. In cosmology, mostly random fields are of interest, for example as the density perturbation field. Therefore, we use the language of random fields in this article. Still, all results are applicable to time series as well.

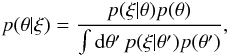

An important statistical quantity of random fields is the two-point correlation function, labelled ξ(x) in the following, with x being the separation between two points of the field. When the empirical correlation function has been measured, it can be used to estimate the parameters of a theoretical model for the random field. The standard method for this, at least in cosmology, is Bayesian inference, using Bayes’ theorem:  (1)where θ are the model parameters, p(θ|ξ) is the posterior probability of some parameters given the data ξ, p(ξ|θ) is called the likelihood and p(θ) is the prior probability of the parameters. The prior can be chosen, more or less freely, by several schemes, and the integral in the denominator is usually not relevant for parameter estimation studies, as only posterior ratios are necessary. But it is crucial to the Bayesian analysis to determine the correct likelihood function for the problem beforehand, a process also known as forward modelling.

(1)where θ are the model parameters, p(θ|ξ) is the posterior probability of some parameters given the data ξ, p(ξ|θ) is called the likelihood and p(θ) is the prior probability of the parameters. The prior can be chosen, more or less freely, by several schemes, and the integral in the denominator is usually not relevant for parameter estimation studies, as only posterior ratios are necessary. But it is crucial to the Bayesian analysis to determine the correct likelihood function for the problem beforehand, a process also known as forward modelling.

However, in many applications, such as those most relevant for cosmology, it is very difficult to obtain the correct likelihood function either empirically or theoretically. Therefore, in those cases where the underlying random field is assumed to be Gaussian, it has been standard practice for some time to simply use a Gaussian approximation for the likelihood, as well. Examples include Fu et al. (2008) in a cosmic shear analysis, Okumura et al. (2008) for luminous red galaxy counts, and Seljak & Bertschinger (1993) for the cosmic microwave background correlation function.

There is no a priori reason to assume that the Gaussian approximation should be exact, or even very accurate. In fact, it was shown by Hartlap et al. (2009) that there is a significant deviation between the real likelihood and a Gaussian for simulated cosmic shear data. In this case, the error bars on cosmological parameters improved by 10–40% when using a non-Gaussian likelihood. This result encouraged a mathematical study on the properties of correlation functions of Gaussian random fields. Schneider & Hartlap (2009) found that these correlation functions cannot take arbitrary values, but are subject to constraints. This can be seen from first studying the power spectrum of the field, which is Fourier conjugate to the correlation function. Power spectra are always non-negative, P(k) ≥ 0 for all wave vectors k.

If the correlation function is measured over a separation vector (or “lag parameter”) x and its integer multiples nx, n ∈ N, the non-negativity of P(k) leads to constraints in the form of inequalities rnl ≤ rn ≤ rnu. Here, rn = ξ(nx)/ξ(0) is the “correlation coefficient” normalised by the correlation at zero separation, and the upper and lower boundaries rnl and rnu are functions of all ri with i < n. The three lowest order constraints are 0 ≤ ξ(0), − 1 ≤ r1 ≤ 1 and  .

.

Since a Gaussian or multivariate Gaussian is unbounded, the existence of the constraints already demonstrates that the real likelihood function cannot be exactly Gaussian, but at least must have its tails cut off. Still, the constraints do not immediately lead to a full description of the likelihood function. Another study by Sato et al. (2011) has used the copula approach to construct a more realistic likelihood function for large-scale structure data. However, this approach is mostly useful for numerical application and does not yield direct insights into the analytical structure of the likelihood function. Also, a preliminary investigation by Wilking & Schneider (in prep.) has shown that a simple approach using a Gaussian copula cannot correctly reproduce the likelihood under constraints. Therefore, in this article we will focus on a simple type of random fields, but try to find a fully analytical expression for the likelihood function, or probability distribution, of correlation functions, and will demonstrate that it is indeed manifestly non-Gaussian.

For this derivation, we concentrate on Gaussian random fields, since their unique properties allow for a fully analytical calculation. In addition, a Gaussian field is fully specified by its two-point statistics, i.e. ξ(x) or equivalently P(k), which makes the derivation of their probability distributions especially rewarding, since then P(k) determines the full set of joint probability distributions p(ξ1), p(ξ1,ξ2), p(ξ1,ξ2,ξ3) and so on, with ξi = ξ(xi).

This article consists of five main sections, apart from this introduction. In Sect. 2, we derive the univariate probability distribution function. We also calculate its moments and present explicit results for a special power spectrum. Then, we repeat the derivation for bivariate distributions in Sect. 3, also discussing its moments. We go on to discuss possible numerical implementations of these results in Sect. 4. Using numerical evaluation, we can discuss the properties of the distribution functions in more detail in Sect. 5. There, we comment on the general analytical properties of the uni- and bivariate functions. We also use the moments to construct an Edgeworth expansion of the univariate distribution, and we generalise our derivations and results to higher dimensions. We conclude the article in Sect. 6.

2. Univariate distribution

2.1. Derivation

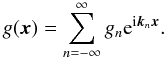

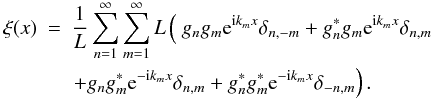

We describe a real Gaussian random field by its Fourier decomposition  (2)The Fourier modes are independently distributed, each with a Gaussian probability distribution:

(2)The Fourier modes are independently distributed, each with a Gaussian probability distribution:  (3)The mode dispersions are determined by the power spectrum,

(3)The mode dispersions are determined by the power spectrum,  (4)The following derivations will be independent of the choice of a power spectrum, as long as it obeys the constraint of non-negativity. So the σn are arbitrary parameters.

(4)The following derivations will be independent of the choice of a power spectrum, as long as it obeys the constraint of non-negativity. So the σn are arbitrary parameters.

As is usually done in numerical simulations, we consider a finite field, x ∈ [0,L] Ndim, with periodic boundary conditions. A sufficiently large finite field will be representative of a field on the whole of RNdim if the random field has no power on scales larger than L. This is also equivalent to the assumption of statistical homogeneity on these scales. Together with isotropy, we know that the correlation function depends on the distance modulus, or lag parameter, only:  (5)We will now concentrate on a one-dimensional field to keep expressions simple. However, in Sect. 5.4 we will see that all results also hold for higher dimensions. We start with an estimator for the correlation function from the finite field, given by

(5)We will now concentrate on a one-dimensional field to keep expressions simple. However, in Sect. 5.4 we will see that all results also hold for higher dimensions. We start with an estimator for the correlation function from the finite field, given by  (6)which approaches the true value for L → ∞.

(6)which approaches the true value for L → ∞.

Going to k-space, the Fourier modes that fit inside the interval [0,L] are discrete. Each wave number kn has to fulfil the condition eiknL = 1, so that  (7)with n ∈ N. For a real-valued random field, the Fourier components fulfil

(7)with n ∈ N. For a real-valued random field, the Fourier components fulfil  . Using this property, the mode expansion of the field can be split up as

. Using this property, the mode expansion of the field can be split up as  (8)Without loss of generality, we assume that the field has zero mean, since we can always achieve this by a simple transformation. Then, the zero mode g0 cancels out. We can then insert his expansion into the estimator (6). For the spatial integrals, we can use the integral representation of the Kronecker delta symbol:

(8)Without loss of generality, we assume that the field has zero mean, since we can always achieve this by a simple transformation. Then, the zero mode g0 cancels out. We can then insert his expansion into the estimator (6). For the spatial integrals, we can use the integral representation of the Kronecker delta symbol:  (9)The correlation function is then given by

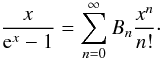

(9)The correlation function is then given by  (10)Executing the sum over m, only half of the terms survive, and the remaining exponentials give a cosine function:

(10)Executing the sum over m, only half of the terms survive, and the remaining exponentials give a cosine function:  (11)Now that we have a convenient expression for (the estimator of) the correlation function, we need to take one more intermediate step before calculating its probability distribution. This is the characteristic function, which, in general, is defined as the Fourier transform of a probability distribution function. For the given random field, we can calculate the characteristic function by means of an ensemble average:

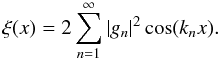

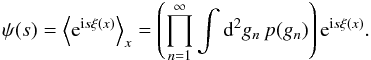

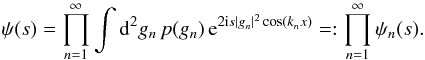

(11)Now that we have a convenient expression for (the estimator of) the correlation function, we need to take one more intermediate step before calculating its probability distribution. This is the characteristic function, which, in general, is defined as the Fourier transform of a probability distribution function. For the given random field, we can calculate the characteristic function by means of an ensemble average:  (12)Since the field is Gaussian, the modes are independently distributed, and the probability distribution factorises. Inserting (11), we get

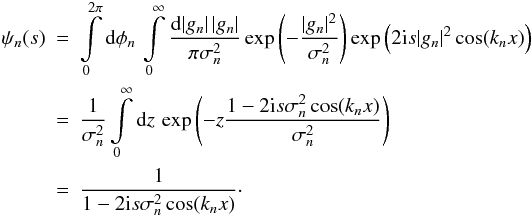

(12)Since the field is Gaussian, the modes are independently distributed, and the probability distribution factorises. Inserting (11), we get  (13)In the individual factors ψn(s), we substitute z = |gn|2 to solve the integral:

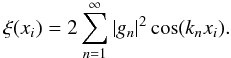

(13)In the individual factors ψn(s), we substitute z = |gn|2 to solve the integral:  (14)With the product over all modes, we obtain the full characteristic function as

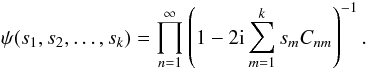

(14)With the product over all modes, we obtain the full characteristic function as  (15)where in the last step we introduced the shorthand notation

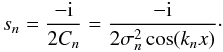

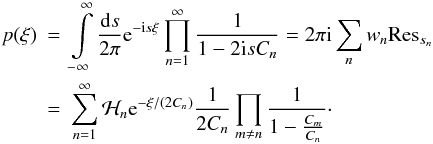

(15)where in the last step we introduced the shorthand notation  (16)The characteristic function is an important result in its own right, since we can use it to calculate the moments of the distribution, which we will do in Sect. 2.2. But for now, we go on to calculate the probability density distribution p(ξ) by an inverse Fourier transform:

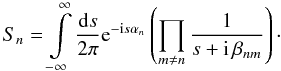

(16)The characteristic function is an important result in its own right, since we can use it to calculate the moments of the distribution, which we will do in Sect. 2.2. But for now, we go on to calculate the probability density distribution p(ξ) by an inverse Fourier transform:  (17)We will solve this integral using the theorem of residues, since the integrand is analytic except at its poles

(17)We will solve this integral using the theorem of residues, since the integrand is analytic except at its poles  (18)All of these lie on the imaginary axis. However, if cos(knx) = 0 for some n, then the corresponding factor in the characteristic function is unity, and there is no pole and no contribution to the integral from this term. On the other hand, some Cn may be equal, and so there may be poles of higher order (multiple poles). These special cases will be discussed in Appendix A, but for now we focus on the standard case of N simple poles.

(18)All of these lie on the imaginary axis. However, if cos(knx) = 0 for some n, then the corresponding factor in the characteristic function is unity, and there is no pole and no contribution to the integral from this term. On the other hand, some Cn may be equal, and so there may be poles of higher order (multiple poles). These special cases will be discussed in Appendix A, but for now we focus on the standard case of N simple poles.

We can choose a contour of integration made up of two parts, a straight section [R,R] combined with a semicircle of radius R, which we parametrise by s = Reiφ, with either φ ∈ [0,π] for the upper or φ ∈ [π,2π] for the lower half-plane. To get the full integral for p(ξ), we have to take the limit of R → ∞. The numerator of the integrand is e − isξ, whereas the denominator, after executing the product, is only a polynomial in s. So the numerator dominates the convergence behaviour, requiring ℜ( − isξ) < 0. For ξ > 0, this corresponds to ℑ(s) < 0, and we can close the contour in the lower half-plane. If instead ξ < 0, the requirement is ℑ(s) > 0, and we can close the contour in the upper half-plane. So for a given ξ, only the poles lying in the corresponding half-plane contribute to the sum of residues. We encode this behaviour in the factor  (19)where for the Heaviside step function we use the convention

(19)where for the Heaviside step function we use the convention  (20)If all poles sn are simple, we can calculate the residues by

(20)If all poles sn are simple, we can calculate the residues by  (21)Inserting the winding numbers wn = 1 for the upper and wn = −1 for the lower contour, the full integral is then

(21)Inserting the winding numbers wn = 1 for the upper and wn = −1 for the lower contour, the full integral is then  (22)This result holds for most relevant combinations of input power spectra and lag parameters. For other cases, we derive a generalised result of a very similar functional form, that also holds for multiple poles, in Appendix A.

(22)This result holds for most relevant combinations of input power spectra and lag parameters. For other cases, we derive a generalised result of a very similar functional form, that also holds for multiple poles, in Appendix A.

We were unable to further simplify the limit of the infinite sum in the probability distribution function (22). However, as long as the power spectrum decreases at least like k-2 for large k, our numerical implementation of the sum formulae, as described in Sect. 4, showed that the probability distribution function converges as well. In practice, it is therefore possible to truncate the series at some maximum mode number N without losing much precision.

Also, it is obvious from Eq. (22) that for large ξ, a single mode will always dominate the sum, so that asymptotically, the distribution is not Gaussian, p(ξ) ∝ e − ξ2, but instead exponential, p(ξ) ∝ e − ξ/(2Cmax).

We also note at this point that Eq. (22) depends on the field size L, separation x and power spectrum P(|kn|) only through the ratios x/L and P(|kn|)/L, as can be seen from the definition of Cn in Eq. (16). When we present numerical results in the further course of this article, we therefore give these quantities only. Furthermore, in the case of a Gaussian power spectrum,  (23)we can directly state L σP as the relevant quantity.

(23)we can directly state L σP as the relevant quantity.

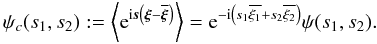

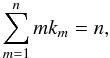

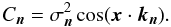

For such a power spectrum with L σP = 150 and a separation x = 0, Fig. 1 demonstrates the convergence behaviour of the distribution function. In this case, the calculations with N = 64 and N = 128 produce virtually indistinguishable results, so that the former mode number is sufficient for all practical purposes. In general, the steepness of the power spectrum determines which maximum wave number N is necessary: Inserting the wave numbers from Eq. (7) into (23), we see that N ~ L σP/2 is sufficient for Gaussian power spectra.

|

Fig. 1 Univariate distributions for different mode numbers N, demonstrating convergence. All distributions have a Gaussian power spectrum with L σP = 150 and lag x = 0. With maxima from left to right: solid: N = 1, long-dashed: N = 2, dashed: N = 4, dotted: N = 8, long-dashed-dotted: N = 16, dashed-dotted: N = 32, double-dashed: N = 64, long-dashed-double-dotted: N = 128. |

2.2. Moments

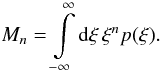

In this section, we calculate the moments of the distribution. Apart from possible use in future applications, this is also useful as a check for the distribution function derived above, since we can derive the moments in two independent ways and compare results. First, we can get the moments Mk from the derivatives of the characteristic function (Kendall & Stuart 1977, p. 63): ![\begin{equation} M_k = \iu^{-k} \left. \deriv[k]{\psi(s)}{s} \right|_{s=0} = \iu^{-k} \left. \deriv[k]{}{s} \prod\limits_{n=1}^{\infty} \frac{1}{1 - 2 \iu s C_n} \right|_{s=0} \cdot \end{equation}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq105.png) (24)The first derivative yields the mean of the distribution, or the expectation value of ξ:

(24)The first derivative yields the mean of the distribution, or the expectation value of ξ:  (25)For the variance and other higher-order quantities, we use the central moments, which are the moments of the distribution of

(25)For the variance and other higher-order quantities, we use the central moments, which are the moments of the distribution of  . The centralised characteristic function is simply

. The centralised characteristic function is simply  (26)and it yields the central moments as (Kendall & Stuart 1977, pp. 57, 63)

(26)and it yields the central moments as (Kendall & Stuart 1977, pp. 57, 63) ![\begin{equation} M_{\mathrm{c}k} = \iu^{-k} \left. \deriv[k]{\psi_{\rm c}(s)}{s} \right|_{s=0} \cdot \end{equation}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq109.png) (27)The first six non-zero central moments are then

(27)The first six non-zero central moments are then ![\begin{eqnarray} \label{eq:moments_momc2} M_{\mathrm{c}2} &=& 4 \sum\limits_{n=1}^{\infty} C_n^2, \\[1.5mm] M_{\mathrm{c}3} &=& 16 \sum\limits_{n=1}^{\infty} C_n^3 , \\[1.5mm] M_{\mathrm{c}4} &=& 48 \sum\limits_{n=1}^{\infty} \left( 3C_n^4 + 2C_n^2 \sum\limits_{m > n}C_m^2 \right) , \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq110.png)

![\begin{eqnarray} \nonumber\\[-7.5mm] M_{\mathrm{c}5} &=& 128 \sum\limits_{n=1}^{\infty} \left( 11C_n^5 + 5C_n^3 \sum\limits_{m \neq n}C_m^2 \right) , \\[1.5mm] M_{\mathrm{c}6} &=& 320 \sum\limits_{n=1}^{\infty} \left( \ 53C_n^6 + 27C_n^4 \sum\limits_{m \neq n}C_m^2 + 16C_n^3 \sum\limits_{m > n}C_m^3 \right. \nonumber \\[1.5mm] && \left. + 18 \sum\limits_{k > m > n}\left(C_nC_mC_k\right)^2 \right) . \label{eq:moments_momc6} \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq111.png) From these moments, we can also obtain some conventional statistical quantities: the variance V(ξ) = Mc2, the standard deviation

From these moments, we can also obtain some conventional statistical quantities: the variance V(ξ) = Mc2, the standard deviation  , the skewness S(ξ) = Mc3/σ3 and the kurtosis K(ξ) = Mc4/σ4 − 3.

, the skewness S(ξ) = Mc3/σ3 and the kurtosis K(ξ) = Mc4/σ4 − 3.

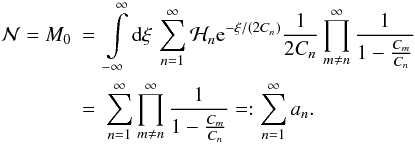

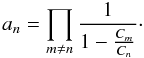

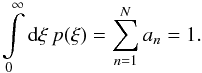

Alternatively, we can also calculate the moments from the probability distribution function by the integrals  (33)An important check for the sanity of the distribution function will be to re-obtain the normalisation as unity by this approach. We can compute it as the moment of order zero:

(33)An important check for the sanity of the distribution function will be to re-obtain the normalisation as unity by this approach. We can compute it as the moment of order zero:  (34)We can evaluate this sum of products by considering another Fourier transform from p(ξ) back to ψ(s):

(34)We can evaluate this sum of products by considering another Fourier transform from p(ξ) back to ψ(s): ![\begin{eqnarray} \psi(s) &=& \int\limits_{-\infty}^{\infty} \diff{\xi} \eto{\iu s \xi} p(\xi) \nonumber \\ &=& \sum\limits_{n=1}^{\infty} \frac{a_n}{2C_n} \int\limits_{-\infty}^{\infty} \diff{\xi} \mathcal{H}_n \eto{\left[ \iu s - 1/(2C_n) \right] \xi} \nonumber \\ &=& \sum\limits_{C_n>0} \frac{a_n}{2C_n} \int\limits_{0}^{\infty} \diff{\xi} \eto{\left[ \iu s - 1/(2C_n) \right] \xi} - \sum\limits_{C_n<0} \frac{a_n}{2C_n} \int\limits_{-\infty}^{0} \diff{\xi} \eto{\left[ \iu s - 1/(2C_n) \right] \xi} \nonumber \\ &=& -\sum\limits_{C_n>0} \frac{a_n}{2C_n} \frac{1}{\iu s -\frac{1}{2C_n}} - \sum\limits_{C_n<0} \frac{a_n}{2C_n} \frac{1}{\iu s -\frac{1}{2C_n}} \nonumber \\ &= &-\sum\limits_{n=1}^{\infty} \frac{a_n}{2C_n \left( \iu s -\frac{1}{2C_n} \right)} = \sum\limits_{n=1}^{\infty} \frac{a_n}{1 - 2 \iu s C_n}\cdot \label{eq:pxi_moments_backtrafo} \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq119.png) (35)Comparing this expression for the characteristic function to the original from Eq. (15), we get

(35)Comparing this expression for the characteristic function to the original from Eq. (15), we get  (36)Evaluation at s = 0 yields

(36)Evaluation at s = 0 yields  (37)so that together with Eq. (34) we have shown that

(37)so that together with Eq. (34) we have shown that  .

.

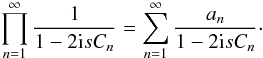

To calculate the higher moments, we make use of the integral  (38)and of sum formulae of the type

(38)and of sum formulae of the type ![\begin{eqnarray} \sum\limits_{n=1}^{N}&\!\!\!\!\!& C_n \prod\limits_{m \neq n}^N \frac{1}{1-\frac{C_m}{C_n}} = \sum\limits_{n=1}^{N} C_n , \\[2.5mm] \sum\limits_{n=1}^{N} &\!\!\!\!\!&C_n^2 \prod\limits_{m \neq n}^N \frac{1}{1-\frac{C_m}{C_n}} = \sum\limits_{n=1}^{N} C_n \sum\limits_{m=n}^{N} C_m , \\[2.5mm] \sum\limits_{n=1}^{N} &\!\!\!\!\!&C_n^3 \prod\limits_{m \neq n}^N \frac{1}{1-\frac{C_m}{C_n}} = \sum\limits_{n=1}^{N} C_n \sum\limits_{m=n}^{N} C_m \sum\limits_{k=m}^{N} C_k . \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq125.png) These follow from taking derivatives with respect to s in Eq. (36) and setting s = 0. We have checked the results for mean, variance, skewness and kurtosis and have reproduced the results of the characteristic function approach, demonstrating the validity of the probability distribution function.

These follow from taking derivatives with respect to s in Eq. (36) and setting s = 0. We have checked the results for mean, variance, skewness and kurtosis and have reproduced the results of the characteristic function approach, demonstrating the validity of the probability distribution function.

2.3. Cumulative distribution function

From the probability distribution function (22), we can also directly calculate the cumulative distribution function, defined as F(ξ) = P(ξ′ > ξ). For single poles only, it is given by ![\begin{eqnarray} F(\xi) &=& \int\limits_\xi^{\infty} \diff{\xi'} p(\xi') \nonumber \\[1.5mm] &=& \sum\limits_{n=1}^{\infty} \frac{1}{2C_n} \prod\limits_{m \neq n} \frac{1}{1-\frac{C_m}{C_n}} \left[ H(\xi) \int\limits_\xi^{\infty} \diff{\xi'} H(C_n) \, \eto{-\xi/(2C_n)} \right. \nonumber \\[1.5mm] &&\left. \quad - H(-\xi) \left( \int\limits_\xi^0 \diff{\xi'} + \int\limits_0^{\infty} \diff{\xi'} \right) H(-C_n) \, \eto{-\xi/(2C_n)} \right] \nonumber \\[1.5mm] &=& \sum\limits_{n=1}^{\infty} \left( \mathcal{H}_n \eto{-\xi/(2C_n)} + H(-\xi) \right) \prod\limits_{n \neq m} \frac{1}{1-\frac{C_m}{C_n}} \cdot \label{eq:univar_cdf} \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq127.png) (42)Again, we use the notation ℋn = H(ξ)H(Cn) − H( − ξ)H( − Cn) for the Heaviside factor, but this time note the extra term of + H( − ξ). Analogous expressions in the presence of higher-order poles could be obtained by integrating the corresponding probability density (A.3).

(42)Again, we use the notation ℋn = H(ξ)H(Cn) − H( − ξ)H( − Cn) for the Heaviside factor, but this time note the extra term of + H( − ξ). Analogous expressions in the presence of higher-order poles could be obtained by integrating the corresponding probability density (A.3).

2.4. A special case – power-law power spectra

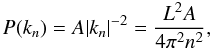

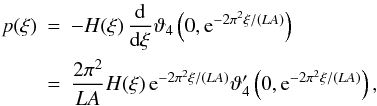

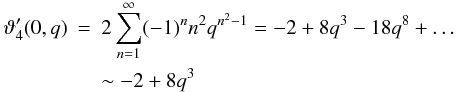

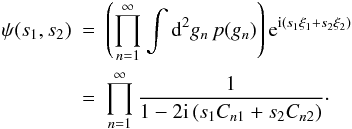

In general, the probability distribution function we found is a sum formula that needs to be evaluated numerically. However, if the power spectrum of the underlying random field is a power law P(k) ∝ |k| − ν, we can analytically find a more explicit expression for the univariate distribution function. In the case of  (43)with A a normalisation constant, and for a separation of x = 0, we have Cn = LA/(4π2n2) and the product factors are

(43)with A a normalisation constant, and for a separation of x = 0, we have Cn = LA/(4π2n2) and the product factors are  (44)which is a special case of the infinite product family (Prudnikov et al. 1986, p. 754)

(44)which is a special case of the infinite product family (Prudnikov et al. 1986, p. 754)  (45)The probability density function (at zero separation) is then

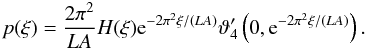

(45)The probability density function (at zero separation) is then  (46)where the field size L comes in through Eq. (4). We can now express the cumulative distribution function in terms of known functions as

(46)where the field size L comes in through Eq. (4). We can now express the cumulative distribution function in terms of known functions as ![\begin{eqnarray} F(\xi) &=& \int\limits_{\xi}^{\infty} \diff{\xi^{\prime}} p(\xi^{\prime}) = 2 H(\xi) \sum\limits_{n=1}^{\infty} (-1)^{n+1} \eto{-2\pi^2n^2\xi/(LA)} \nonumber \\ &=& H(\xi) \left[ 1 - \vartheta_4\left(0,\eto{-2\pi^2\xi/(LA)}\right) \right] . \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq137.png) (47)Here, ϑ4(0,q) is a special case of the Jacobi elliptic theta function, which is known to satisfy (Whittaker & Watson 1963, p. 463 ff.)

(47)Here, ϑ4(0,q) is a special case of the Jacobi elliptic theta function, which is known to satisfy (Whittaker & Watson 1963, p. 463 ff.) ![\begin{equation} \label{eq:univar_special_theta4} \sum\limits_{n=1}^{\infty} (-1)^n q^{n^2} = \frac{1}{2} \left[ -1 + \vartheta_4\left(0,q\right) \right] . \end{equation}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq139.png) (48)We can then re-obtain the probability density function by differentiation,

(48)We can then re-obtain the probability density function by differentiation,  (49)where

(49)where  . It is not clear to us yet whether this connection to elliptical functions is a coincidence for just this special case, or whether it points towards a possible reformulation of the probability distribution for general power spectra.

. It is not clear to us yet whether this connection to elliptical functions is a coincidence for just this special case, or whether it points towards a possible reformulation of the probability distribution for general power spectra.

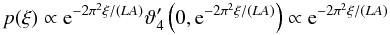

However, it allows us to analyse the asymptotic behaviour of the distribution function for large ξ. From Eq. (48), we have  (50)for q ≪ 1. Then, inserting into Eq. (50), we obtain

(50)for q ≪ 1. Then, inserting into Eq. (50), we obtain  (51)for ξ ≫ 1. Thus, the distribution function behaves like a single exponential at large ξ, and not like a Gaussian at all. This is in agreement with our general result p(ξ) ∝ e − ξ/(2Cmax) for large ξ from Sect. 2.1.

(51)for ξ ≫ 1. Thus, the distribution function behaves like a single exponential at large ξ, and not like a Gaussian at all. This is in agreement with our general result p(ξ) ∝ e − ξ/(2Cmax) for large ξ from Sect. 2.1.

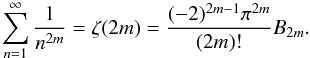

For the same power spectrum, we can also explicitly calculate the moments. With Cn ∝ P(|k|) ∝ |k|-2, we obtain the mth (central) moment from the sum  (52)Here, ζ(m) is the Riemann zeta function and Bm are the Bernoulli numbers (Prudnikov et al. 1986, p. 776) given by

(52)Here, ζ(m) is the Riemann zeta function and Bm are the Bernoulli numbers (Prudnikov et al. 1986, p. 776) given by  (53)For example, the mean of the distribution then is simply given by

(53)For example, the mean of the distribution then is simply given by  and the standard deviation is

and the standard deviation is  .

.

For power law power spectra with a different exponent, Eq. (45) still allows us to express the product factors explicitly in terms of known (trigonometric and hyperbolic) functions. Regrettably, these do not yield any known functions for the full probability distribution, or for the moments, as far as we are aware. Thus, a really explicit form was found for the special case of P(k) ∝ |k|-2 only.

3. Bivariate distribution

3.1. Derivation

In this section, we will calculate the bivariate probability distribution function p(ξ(x1),ξ(x2)), which we will mostly abbreviate as p(ξ1,ξ2) with ξ1 = ξ(x1), ξ2 = ξ(x2). All preliminaries carry over from the univariate case, and the starting point of this calculation is  (54)The characteristic function is now bivariate as well,

(54)The characteristic function is now bivariate as well,  (55)In the last step, we have defined a generalised shorthand for the factors

(55)In the last step, we have defined a generalised shorthand for the factors  to allow for the two different lag parameters xm. It is worth noting at this point that for higher multivariate distributions, say p(ξ1,ξ2,...,ξk), the only change necessary in the characteristic function will be to add additional terms of smCnm in this factor, resulting in the generally valid expression

to allow for the two different lag parameters xm. It is worth noting at this point that for higher multivariate distributions, say p(ξ1,ξ2,...,ξk), the only change necessary in the characteristic function will be to add additional terms of smCnm in this factor, resulting in the generally valid expression  (56)Next, we will obtain the bivariate probability distribution itself from the characteristic function by Fourier inversion, in analogy to the univariate case. But an important difference arises in this step, since the inversion now contains a double integration. Thus, we have to calculate the following:

(56)Next, we will obtain the bivariate probability distribution itself from the characteristic function by Fourier inversion, in analogy to the univariate case. But an important difference arises in this step, since the inversion now contains a double integration. Thus, we have to calculate the following: ![\begin{equation} p(\xi_1,\xi_2)=\int\limits_{-\infty}^{\infty} \frac{\fdiff{s_1}}{2\pi} \int\limits_{-\infty}^{\infty} \frac{\fdiff{s_2}}{2\pi} \frac{\eto{-\iu \left(s_1 \xi_1 + s_2 \xi_2 \right)}}{\prod\limits_{n=1}^{\infty} \left[ 1 - 2 \iu \left( s_1 C_{n1} + s_2 C_{n2} \right) \right] } \cdot \end{equation}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq164.png) (57)Since the pairs of variables (s1,s2) and (ξ1,ξ2) each are mutually independent, the result has to be invariant under exchanging the order of integration. We choose to first integrate over ds2 and after that over ds1. From the resulting formula, the symmetry will not be immediately apparent. However, we have checked the equivalence of both approaches by also explicitly evaluating the other choice. Also, we will assume simple poles in both integrations, and will only briefly comment on the effects of multiple poles at the end of this section.

(57)Since the pairs of variables (s1,s2) and (ξ1,ξ2) each are mutually independent, the result has to be invariant under exchanging the order of integration. We choose to first integrate over ds2 and after that over ds1. From the resulting formula, the symmetry will not be immediately apparent. However, we have checked the equivalence of both approaches by also explicitly evaluating the other choice. Also, we will assume simple poles in both integrations, and will only briefly comment on the effects of multiple poles at the end of this section.

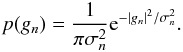

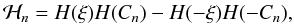

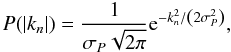

|

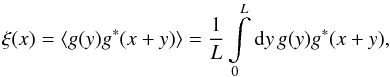

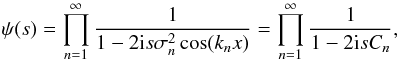

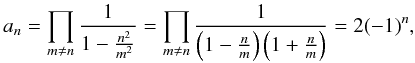

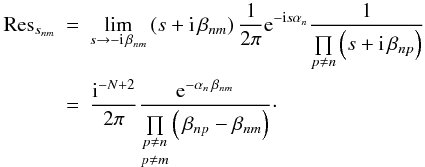

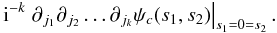

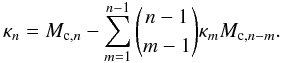

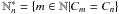

Fig. 2 Isoprobability contours of the bivariate distributions p(ξ1,ξ2) for a Gaussian power spectrum with L σP = 20 and 16 modes. Left panel: x/L = (0.15,0), right panel: x/L = (0.2,0.25). |

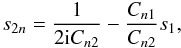

The poles for the inner integration are now located at  (58)and, for simple poles, their residues are

(58)and, for simple poles, their residues are ![\begin{eqnarray} {\rm Res}_{s_{2n}} & =& \lim\limits_{ s_2 \rightarrow \frac{1}{2 \iu C_{n2}}-\frac{C_{n1}}{C_{n2}}s_1 } \left[ \left( s_2-\frac{1}{2 \iu C_{n2}}+\frac{C_{n1}}{C_{n2}}s_1 \right) \frac{\eto{-\iu (s_1 \xi_1 + s_2 \xi_2)}}{2\pi} \right. \nonumber \\ && \left. \times \prod\limits_{m=1}^{\infty} \frac{1}{1 - 2 \iu (s_1 C_{m1} + s_2 C_{m2})} \right] \nonumber \\ & = & \frac{\iu}{2\pi} \frac{\eto{-\xi_2/\left(2C_{n2}\right) - \iu s_1 \left[ \xi_1-\left(C_{n1}/C_{n2}\right)\xi_2 \right]} }{2C_{n2}} \left( \prod\limits_{m \neq n} \frac{C_{n2}}{2 \iu D_{nm}} \frac{1}{s_1+\frac{C_{n2} - C_{m2}}{2 \iu D_{nm}}} \right){\cdot} \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq173.png) (59)Here we have simplified the expression by defining the determinant factor

(59)Here we have simplified the expression by defining the determinant factor  (60)The arguments as to the choice of contours apply exactly as before, since the imaginary part of the poles remains unchanged from Eqs. (18) to (58). Thus, poles with positive Cn2 factors lie within the lower contour, whereas those with negative Cn2 lie within the upper contour. The corresponding Heaviside factors encoding this behaviour are H(ξ2)H(Cn2) and H( − ξ2)H( − Cn2). The winding numbers are wn = 1 for the upper and wn = −1 for the lower contour. So we obtain the full integral as

(60)The arguments as to the choice of contours apply exactly as before, since the imaginary part of the poles remains unchanged from Eqs. (18) to (58). Thus, poles with positive Cn2 factors lie within the lower contour, whereas those with negative Cn2 lie within the upper contour. The corresponding Heaviside factors encoding this behaviour are H(ξ2)H(Cn2) and H( − ξ2)H( − Cn2). The winding numbers are wn = 1 for the upper and wn = −1 for the lower contour. So we obtain the full integral as ![\begin{eqnarray} p(\xi_1,\xi_2) &=& \int\limits_{-\infty}^{\infty} \frac{\fdiff{s_1}}{2\pi} \int\limits_{-\infty}^{\infty} \frac{\fdiff{s_2}}{2\pi} \eto{-\iu (s_1 \xi_1 + s_2 \xi_2)} \prod\limits_{n=1}^{\infty} \frac{1}{1 - 2 \iu (s_1 C_{n1} + s_2 C_{n2})} \nonumber \\ & =& \int\limits_{-\infty}^{\infty} \frac{\fdiff{s_1}}{2\pi} 2 \pi \iu \sum\limits_n w_n {\rm Res}_{s_{2n}} \nonumber \\ & =& \sum\limits_{n=1}^{\infty} \left[ H(\xi_2)H(C_{n2}) - H(-\xi_2)H(-C_{n2}) \right] \eto{-\xi_2/\left(2C_{n2}\right)} \nonumber \\ & & \times \!\!\int\limits_{-\infty}^{\infty} \frac{\fdiff{s_1}}{2\pi} \frac{\eto{-\iu s_1\left[\xi_1-(C_{n1}/C_{n2})\xi_2\right]}}{2C_{n2}} \left( \prod\limits_{m \neq n} \frac{C_{n2}}{2 \iu D_{nm}} \frac{1}{s_1 \!+\! \iu \frac{C_{m2} - C_{n2}}{2D_{nm}}} \right)\cdot \label{eq:bivar_derivation_firstintegral} \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq178.png) (61)The remaining task is to calculate the second integral. For ease of notation, we will substitute s1 → s and introduce the new variables

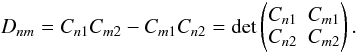

(61)The remaining task is to calculate the second integral. For ease of notation, we will substitute s1 → s and introduce the new variables  (62)Then, the second integral can be reduced to the calculation of the term

(62)Then, the second integral can be reduced to the calculation of the term  (63)This integral has poles at snm = −i βnm. For simple poles, the residues are

(63)This integral has poles at snm = −i βnm. For simple poles, the residues are  (64)Furthermore, the choice of contours is also very similar to the previous procedure. We will again close the contour with semi-circles in either the upper or the lower half-plane, and the purely imaginary poles snm = −i βnm lead, by the same convergence argument, to Heaviside factors H(αn)H( βnm) for the contour in the lower half-plane and H( − αn)H( − βnm) for the contour in the upper half-plane. With the usual winding numbers wn = ± 1, the integral is

(64)Furthermore, the choice of contours is also very similar to the previous procedure. We will again close the contour with semi-circles in either the upper or the lower half-plane, and the purely imaginary poles snm = −i βnm lead, by the same convergence argument, to Heaviside factors H(αn)H( βnm) for the contour in the lower half-plane and H( − αn)H( − βnm) for the contour in the upper half-plane. With the usual winding numbers wn = ± 1, the integral is ![\begin{eqnarray} S_n &=& 2 \pi \iu \sum\limits_n w_n {\rm Res}_{s_{nm}} \nonumber \\ &=& \sum\limits_{m \neq n} \left[ H(\alpha_n)H(\,\beta_{nm}) - H(-\alpha_n)H(-\beta_{nm}) \right] \frac{\iu^{-N+1} \eto{-\alpha_n \, \beta_{nm}}}{\underset{p \neq m}{\underset{p \neq n}{\prod }} \left( \, \beta_{np}-\beta_{nm} \right)} \cdot \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq187.png) (65)Reinserting this result into the full expression (61), the bivariate probability distribution function is

(65)Reinserting this result into the full expression (61), the bivariate probability distribution function is ![\begin{eqnarray} p(\xi_1,\xi_2)_N & =& \frac{(-1)^{N+1}}{2^N} \sum\limits_{n=1}^{N} \frac{C_{n2}^{N-2}}{ \prod\limits_{m \neq n} D_{nm}} \\[2mm] \nonumber && \times \sum\limits_{m \neq n} \mathfrak{H}_{nm} \frac{\exp\left(\frac{(C_{n2}-C_{m2})\xi_1+(C_{m1}-C_{n1})\xi_2}{2D_{nm}}\right)} {\underset{p \neq m}{\underset{p \neq n}{\prod }} \left( \, \beta_{np}-\beta_{nm} \right)} , \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq188.png) (66)where we used a shorthand notation for all of the Heaviside factors:

(66)where we used a shorthand notation for all of the Heaviside factors: ![\begin{eqnarray} \mathfrak{H}_{nm} &= & \ \left[ H(\xi_2)H(C_{n2}) - H(-\xi_2)H(-C_{n2}) \right] \nonumber \\[2mm] && \times \left[ H(\alpha_n)H(\,\beta_{nm}) - H(-\alpha_n)H(-\beta_{nm}) \right] . \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq189.png) (67)Finally, we can bring this expression to a more symmetric form by reinserting the βnm and shifting around some factors:

(67)Finally, we can bring this expression to a more symmetric form by reinserting the βnm and shifting around some factors:  (68)This derivation is only valid if, in both integrations, none of the poles vanish or are of higher order. But like we do for the univariate distribution in Appendix A, we can find corrections to get the most general result. In this case, they become rather unwieldy, and we will not present them in this article, since they can be easily avoided by choosing well-behaved power spectra and non-commensurable lag parameters. However, in Fig. 2 we show results for lag parameters which produce such zero modes. The corrections were implemented in the numerical code, as described in Sect. 4, and produce smooth, non-singular results.

(68)This derivation is only valid if, in both integrations, none of the poles vanish or are of higher order. But like we do for the univariate distribution in Appendix A, we can find corrections to get the most general result. In this case, they become rather unwieldy, and we will not present them in this article, since they can be easily avoided by choosing well-behaved power spectra and non-commensurable lag parameters. However, in Fig. 2 we show results for lag parameters which produce such zero modes. The corrections were implemented in the numerical code, as described in Sect. 4, and produce smooth, non-singular results.

Both panels show results for an one-dimensional field with a rather narrow Gaussian power spectrum, L σP = 20, since this allows for convergence with a small number of modes. N = 16 was used for the diagrams. The left panel shows the distribution for separations x1/L = 0.15 and x2 = 0. It is obvious that the distribution obeys the constraint |ξ(x1)| ≤ ξ(0), with zero probability outside the triangular region defined by this constraint. It is also clearly asymmetric in ξ1, demonstrating the non-Gaussianity. Marginalisations over either ξ1 or ξ2, shown in Fig. 3, also demonstrate the non-Gaussian nature of the bivariate distribution, and are consistent with our univariate results.

The right panel of Fig. 2 shows the case of x1/L = 0.2, x2/L = 0.25. Here, no constraints are visible, since we have not fixed any specific value of ξ0, so that the distribution is averaged over all realisations with arbitrary ξ0 and so both ξ1 and ξ2 are unbound. Still, the non-Gaussian nature is apparent in this case as well, since the isoprobability contours have kinks and straight segments following the prescription of the Heaviside terms.

|

Fig. 3 Univariate distributions obtained by marginalisation from the bivariate p(ξ1,ξ2), for a Gaussian power spectrum with L σP = 20, 16 modes and x/L = (0.15,0). Solid: p(ξ1), dashed: p(ξ2). |

3.2. Moments

There are analogues of mean, variance and also of higher moments for multivariate distributions. As we did in Sect. 2.2 for the univariate distribution, we can calculate them either by integrating the distribution function or by differentiating the characteristic function. Since two-dimensional integrals are more cumbersome, we restrict ourselves to the characteristic function approach for the bivariate moments.

The mean is now a vector, but calculation and result are quite similar to the univariate case in Eq. (25):  (69)For the central moments, the centralised characteristic function is again easy to obtain:

(69)For the central moments, the centralised characteristic function is again easy to obtain:  (70)The analogue of the variance in the bivariate case is the 2 × 2 covariance matrix, which we get from the second centralised moments:

(70)The analogue of the variance in the bivariate case is the 2 × 2 covariance matrix, which we get from the second centralised moments: ![\begin{eqnarray} \Sigma &=& \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{pmatrix} = \left. \begin{pmatrix} -\partial_{1}^2 \psi_c(s_1,s_2) & -\partial_{1}\partial_{2} \psi_c(s_1,s_2) \\ -\partial_{2}\partial_{1} \psi_c(s_1,s_2) & -\partial_{2}^2 \psi_c(s_1,s_2) \end{pmatrix} \right|_{s_1=0=s_2} \nonumber \\[2.5mm] &=& \begin{pmatrix} 4 \sum\limits_{n=1}^{\infty} C_{n1}^2 & 4 \sum\limits_{n=1}^{\infty} C_{n1} C_{n2} \\ 4 \sum\limits_{n=1}^{\infty} C_{n1} C_{n2} & 4 \sum\limits_{n=1}^{\infty} C_{n2}^2 \end{pmatrix} . \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq204.png) (71)Higher central moments Mck could be obtained by building tensors of rank k from the components

(71)Higher central moments Mck could be obtained by building tensors of rank k from the components  (72)

(72)

3.3. Higher multivariate distributions

In this work, we have derived both univariate and bivariate distribution functions, but no multivariate distributions of more than two correlation functions. In principle, however, these could be obtained by exactly the same type of derivation. From the general multivariate characteristic function, Eq. (56), the k-variate distribution function can in general be obtained by solving the k integrals in  (73)Since the integration was already quite complicated for k = 2, this is not very practical for higher multivariates.

(73)Since the integration was already quite complicated for k = 2, this is not very practical for higher multivariates.

However, since the multivariate characteristic function is not very complicated and can be computed directly from the input power spectrum, an alternative approach would be to calculate this numerically on a grid in s space, and then do a numerical complex-to-real Fourier transform on these values to obtain p(ξ) on a corresponding grid in ξ space.

This approach could be quite efficient for practical purposes, where the likelihood function is only needed at discrete points anyway. However, the efficiency and stability of this approach depends strongly on the input Cnm factors, since the denominator in the integrand might prove to be numerically hard to handle. Estimating the performance of such a calculation would require further studies.

4. Numerical implementation

The numerical implementation of the probability distribution function, Eq. (22), or of the cumulative distribution function, Eq. (42), as required for a Bayesian parameter estimation or even for simply plotting the functions, is not trivial. For a small number of modes and benign parameters (number of dimensions, power spectrum, lag parameter), this can be done straightforwardly in any computer numerics system. In general, however, the summation of many terms with very different orders of magnitude (due to the exponentials and the product factors) leads to a high demand in numerical accuracy. If the internal accuracy of the software is less than the number of significant digits in the summands, rounding and addition errors lead to uncontrollable errors in p(ξ) and all related quantities.

We solved this problem by using the arprec package (Bailey et al. 2002), available for C, C++ and Fortran, which allows calculations with up to 1000 decimal digits. The higher the ratio of separation and field size or the number of significant modes, the higher the necessary precision. However, a large working precision significantly increases the run-time for each evaluation of the likelihood function. Therefore, for a fast and stable implementation suitable for parameter estimation, a way has to be found to determine the necessary precision beforehand.

For plotting the distribution as a function of ξ, we only need to compute the product factors once. But in a parameter estimation, the likelihood is evaluated for a different power spectrum in each step, and so an efficient computation of the product factors is also necessary. We will describe one such possibility in Sect. 5.2, which, however, comes with its own numerical issues. Further investigation into efficient and stable implementations seems necessary.

5. Discussion

5.1. Analytic properties

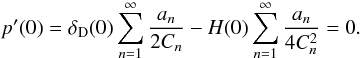

The probability distribution function, Eq. (22), has some interesting analytic properties. For lag parameters x ≠ 0, the distribution (as the full infinite sum) is non-vanishing both for positive and negative ξ, and infinitely differentiable at all points. However, there are some subtleties when the sum gets truncated. We can most easily see this for the special case of x = 0, where all Cn are positive, and we obtain  (74)with the an defined as in Eq. (34). Thus, we have p(ξ) > 0 for ξ > 0 and p(ξ) = 0 for ξ ≤ 0. The first derivative is

(74)with the an defined as in Eq. (34). Thus, we have p(ξ) > 0 for ξ > 0 and p(ξ) = 0 for ξ ≤ 0. The first derivative is  (75)with the Dirac delta function

(75)with the Dirac delta function  . At ξ = 0, we have

. At ξ = 0, we have  (76)For the last step, we had to define 0·δD(0) = 0, and to use the equality

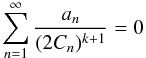

(76)For the last step, we had to define 0·δD(0) = 0, and to use the equality  (77)for k = 0 and k = 1, which can be proven by the same argument used for the normalisation in Sect. 2.2, equating two different forms of the characteristic function ψ(s) and then taking the kth derivative in s.

(77)for k = 0 and k = 1, which can be proven by the same argument used for the normalisation in Sect. 2.2, equating two different forms of the characteristic function ψ(s) and then taking the kth derivative in s.

So far, we have seen that p(0) = 0 and p′(0) = 0. If we consider the full infinite sum for p(ξ), the same is true for all derivatives ![\begin{equation} p^{(k)}(\xi) = \deriv[k]{p(\xi)}{\xi} = H(\xi) \sum\limits_{n=1}^{\infty} \eto{-\xi/(2C_n)} \frac{(-1)^k a_n}{(2C_n)^{k+1}} + (\dots) , \end{equation}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq227.png) (78)where (... ) stands for terms proportional to δD(x) and its derivatives: we find that p(ξ) can be differentiated infinitely often at all points along the real axis, with all derivatives vanishing at ξ = 0. But, if the sum is truncated at some arbitrary mode number N, this is no longer true. In this case, only the first N − 1 derivatives will vanish at the origin, and higher derivatives will be discontinuous at this point, making p(ξ) differentiable only N − 1 times. This also holds for x ≠ 0: a sum truncated at N modes is only N − 1 times continuously differentiable. Still, this phenomenon does not harm the convergence of the sum, and the truncated function can still be used as a good approximation of the full sum if N is chosen sufficiently large, as we have demonstrated numerically.

(78)where (... ) stands for terms proportional to δD(x) and its derivatives: we find that p(ξ) can be differentiated infinitely often at all points along the real axis, with all derivatives vanishing at ξ = 0. But, if the sum is truncated at some arbitrary mode number N, this is no longer true. In this case, only the first N − 1 derivatives will vanish at the origin, and higher derivatives will be discontinuous at this point, making p(ξ) differentiable only N − 1 times. This also holds for x ≠ 0: a sum truncated at N modes is only N − 1 times continuously differentiable. Still, this phenomenon does not harm the convergence of the sum, and the truncated function can still be used as a good approximation of the full sum if N is chosen sufficiently large, as we have demonstrated numerically.

However, it is of mathematical interest to note that even the infinite sum version of Eq. (74) has peculiar properties at ξ = 0. If we consider the function p(ξ) on the complex plane, the directional derivatives anywhere but on the real line will not vanish, while they do along the real line. Thus, the function is not analytic in any neighbourhood of ξ = 0, and therefore the origin is an essential singularity of this function. This phenomenon can also be seen in the special case discussed in Sect. 2.4, where  (79)The elliptic theta function shares just this analytic behaviour. Still, these functions are smooth for the purposes of real calculus, and there are no problems in practical applications of our results due to this phenomenon.

(79)The elliptic theta function shares just this analytic behaviour. Still, these functions are smooth for the purposes of real calculus, and there are no problems in practical applications of our results due to this phenomenon.

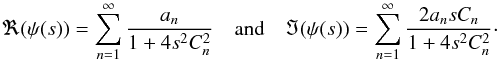

Also, discussion in the complex plane allows for another interesting result. Recalling the characteristic function from Eq. (35), which is in general a complex function, we have  (80)Obviously, the real part is an even function in s, while the imaginary part is odd. Considering the back transform to the probability distribution function, we find

(80)Obviously, the real part is an even function in s, while the imaginary part is odd. Considering the back transform to the probability distribution function, we find ![\begin{eqnarray} p(\xi) &=& \int\limits_{-\infty}^{\infty} \frac{\fdiff{s}}{2\pi} \eto{-\iu s \xi} \psi(s) \nonumber \\ &=& \int\limits_{-\infty}^{\infty} \frac{\fdiff{s}}{2\pi} \left[\cos(s\xi) - \iu \sin(s\xi)\right] \left[ \Re\left( \psi(s) \right) + \iu \Im\left( \psi(s) \right) \right] \nonumber \\ &=& \int\limits_{-\infty}^{\infty} \frac{\fdiff{s}}{2\pi} \left[\cos(s\xi)\Re\left(\psi(s)\right) + \sin(s\xi)\Im\left(\psi(s)\right)\right] \nonumber \\ & &+ \iu \int\limits_{-\infty}^{\infty} \frac{\fdiff{s}}{2\pi} \left[ \cos(s\xi) \Im\left( \psi(s) \right) - \sin(s\xi) \Re \left( \psi(s) \right) \right] . \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq233.png) (81)Therefore, the real part of p(ξ) contains an even-even and an odd-odd term, whereas the imaginary part consists of two even-odd terms, which vanish under the integration. This way, we have proven that the probability distribution function is indeed purely real, a fact that was not immediately evident from the original derivation, which had the complex characteristic function as an intermediate step.

(81)Therefore, the real part of p(ξ) contains an even-even and an odd-odd term, whereas the imaginary part consists of two even-odd terms, which vanish under the integration. This way, we have proven that the probability distribution function is indeed purely real, a fact that was not immediately evident from the original derivation, which had the complex characteristic function as an intermediate step.

Going to the bivariate distribution function, we find analytical issues similar to those in the univariate case. If both lag parameters are non-zero, p(ξ1,ξ2) is non-zero in the full (ξ1,ξ2) plane and smooth everywhere. If, however, one of the ξi = 0, then the probability density is strictly zero outside the boundaries defined by the constraint inequality |ξ(x)| ≤ |ξ(0)|, with the function going smoothly to zero at these boundaries, in the sense that the real directional derivatives (partial derivatives along unit vectors) vanish when crossing the boundaries. However, we have only checked this smoothness numerically, since an analytical calculation of the derivatives would become rather convoluted.

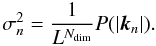

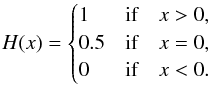

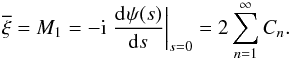

|

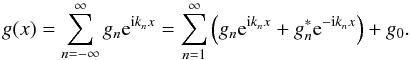

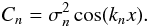

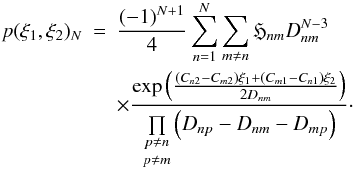

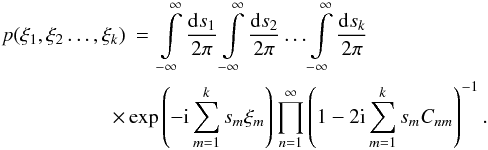

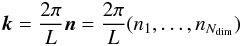

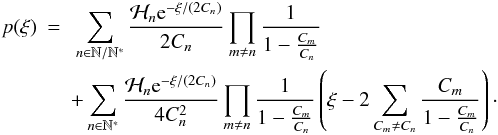

Fig. 4 Edgeworth expansions compared to full analytical distributions, for Gaussian power spectra. Left panel: x = 0, L σP = 20, N = 16; solid: analytical, dashed: 0-order Edg., dotted: 3rd order Edg., dash-dotted: 4th order Edg. Right panel: x/L = 0.5, L σP = 100, N = 32; solid: analytical, dashed: 0-order Edg., dotted: 3rd order Edg., dash-dotted: 6th order Edg. |

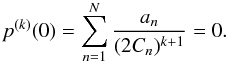

5.2. Alternative calculation of product factors

In principle, the distribution function (22) is simply a weighted sum of exponentials. However, the weights are given by the products  (82)These make the summation complicated, since closed-form analytic expressions exist in special cases only, and high accuracy is needed to compute them numerically. Therefore, we were looking for alternative ways to calculate these products, and found an approach by a linear set of equations (LSE).

(82)These make the summation complicated, since closed-form analytic expressions exist in special cases only, and high accuracy is needed to compute them numerically. Therefore, we were looking for alternative ways to calculate these products, and found an approach by a linear set of equations (LSE).

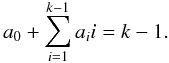

We obtain the first equation of this system from the normalisation, as calculated in Sect. 2.2:  (83)For the remaining equations, we consider again the smoothness of the distribution function at the origin. As already discussed in Sect. 5.1, the first N − 1 derivatives of p(ξ) with respect to ξ yield the equations

(83)For the remaining equations, we consider again the smoothness of the distribution function at the origin. As already discussed in Sect. 5.1, the first N − 1 derivatives of p(ξ) with respect to ξ yield the equations  (84)Then, we can consider the N factors an as the components of a vector a = (a1,a2,...,aN). Combining this with another vector b = (1,0,0,...,0) with N entries and with the N × N coefficient matrix M of the above equations, we obtain the LSE

(84)Then, we can consider the N factors an as the components of a vector a = (a1,a2,...,aN). Combining this with another vector b = (1,0,0,...,0) with N entries and with the N × N coefficient matrix M of the above equations, we obtain the LSE  (85)We can then solve this LSE by any of the usual methods. In particular, we applied the Singular Value Decomposition (SVD) as implemented in the GNU Scientific Library (GSL, Galassi et al. 2009). This implementation has limited precision and therefore runs into the same numerical problems described in Sect. 4. However, SVD solutions for many numerically challenging problems exist, and therefore the general approach seems promising.

(85)We can then solve this LSE by any of the usual methods. In particular, we applied the Singular Value Decomposition (SVD) as implemented in the GNU Scientific Library (GSL, Galassi et al. 2009). This implementation has limited precision and therefore runs into the same numerical problems described in Sect. 4. However, SVD solutions for many numerically challenging problems exist, and therefore the general approach seems promising.

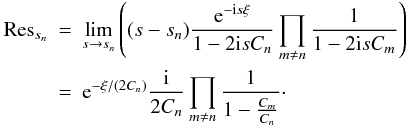

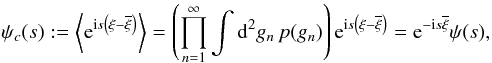

|

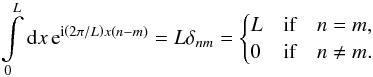

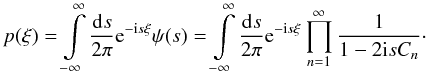

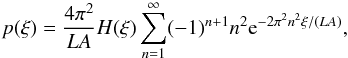

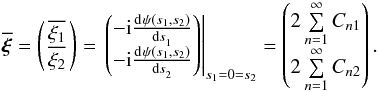

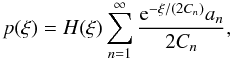

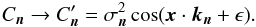

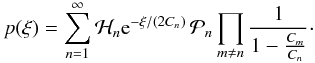

Fig. 5 Univariate distributions for a power-law power spectrum and different separation vectors x, demonstrating isotropy in 2D. Left panel: dashed: x/L = (0.3,0), dotted: x′/L ≈ (0.212,0.212), right panel: dashed: x/L = (0.03,0), dotted: x′/L ≈ (0.0212,0.0212). |

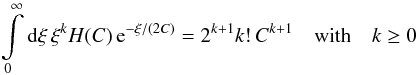

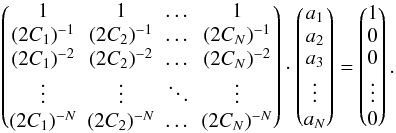

5.3. Edgeworth expansion

Since the moments have simpler expressions (Eqs. (25) and (28) to (32)) than the probability distribution function itself, it seems promising to express the distribution in terms of its moments. One way to do so is the Edgeworth asymptotic expansion, described in Blinnikov & Moessner (1998). It has the form ![\begin{eqnarray} \mathbf{p}\left(\mathbf{\sigma\xi+\overline{\xi}}\right)& =& \frac{\eto{-\xi^2/2}}{\sqrt{2\pi}\sigma} \left[ 1 + \sum\limits_{n=1}^{\infty} \sigma^n \sum\limits_{\{k_m\}} \frac{{\rm He}_{n+2r}(\xi)}{k_m!} \right. \label{eq:edgeworth} \\ && \left. \times \prod\limits_{m=1}^n \left( \frac{\kappa_{m+2}}{\sigma^{2m+2}(m+2)!} \right)^{k_m} \right] \nonumber \end{eqnarray}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq250.png) (86)with the (probabilist’s) Hermite polynomials Hen, and

(86)with the (probabilist’s) Hermite polynomials Hen, and  and σ as given in Sect. 2.2. The inner sum runs over all sets { km } of non-negative integers solving the Diophantine equation

and σ as given in Sect. 2.2. The inner sum runs over all sets { km } of non-negative integers solving the Diophantine equation  (87)and we also defined, for each such set,

(87)and we also defined, for each such set,  (88)We calculate the { km } and r with the algorithm presented in Appendix C of Blinnikov & Moessner (1998).

(88)We calculate the { km } and r with the algorithm presented in Appendix C of Blinnikov & Moessner (1998).

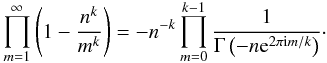

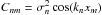

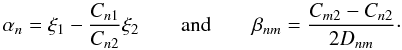

The first term in the Edgeworth expansion is a simple Gaussian, and the higher order terms are given by the cumulants κn of the distribution in question, which we can derive from the central moments Mc,n as  (89)In Fig. 4, we demonstrate the performance of the Edgeworth expansion in two examples, both for Gaussian power spectra. The left panel shows a distribution at zero lag, with L σP = 20 and 16 modes. The Edgeworth term of order zero, a Gaussian with the same mean and variance as the full distribution, is a very bad fit in this case. The third-order Edgeworth expansion is the best fit, fitting the peak of the distribution almost perfectly and the tail decently well, while also producing negative probabilities for some ξ < 0. Adding additional terms only makes the approximation worse, distorting it in the high probability region. For the right panel, we used x/L = 0.5, L σP = 100 and N = 32. For this more symmetric distribution, the Gaussian is already a better fit; still, the third order Edgeworth expansion fits even better at the peak. Higher orders again begin to deviate, as evidenced by the strongly two-peaked sixth-order expansion.

(89)In Fig. 4, we demonstrate the performance of the Edgeworth expansion in two examples, both for Gaussian power spectra. The left panel shows a distribution at zero lag, with L σP = 20 and 16 modes. The Edgeworth term of order zero, a Gaussian with the same mean and variance as the full distribution, is a very bad fit in this case. The third-order Edgeworth expansion is the best fit, fitting the peak of the distribution almost perfectly and the tail decently well, while also producing negative probabilities for some ξ < 0. Adding additional terms only makes the approximation worse, distorting it in the high probability region. For the right panel, we used x/L = 0.5, L σP = 100 and N = 32. For this more symmetric distribution, the Gaussian is already a better fit; still, the third order Edgeworth expansion fits even better at the peak. Higher orders again begin to deviate, as evidenced by the strongly two-peaked sixth-order expansion.

The reason for this behaviour is that, for the higher terms, the Edgeworth expansion assigns high weights to the tails of the distribution. Since the distribution we are analysing is in fact far from Gaussian, especially in the tails, the fit to the peak correspondingly gets worse, and often even multiple peaks appear. This is similar to the effect noticed by Blinnikov & Moessner (1998) for the example of χ2 distributions. Also, the regions of negative probability density are generic features of the Edgeworth expansion when applied to strongly non-Gaussian distributions.

Still, if we truncate the Edgeworth expansion after a wisely chosen number of terms, it will provide a good approximation to the true probability distribution. Since Eq. (86) is an asymptotic series expansion, it generally does not converge, but we do have a method at hand to find the optimal number of terms and to control the error we make. If we truncate the sum over n in Eq. (86) after N terms, the last term retained will also give the order of the difference between the full p(ξ) and this partial sum expansion. Therefore we can, in practice, simply truncate the expansion at the term with minimal contribution (measured at the peak of the distribution, at a certain point of evaluation, or integrated over a domain in ξ we are interested in).

For both cases illustrated in Fig. 4, this criterion gives N = 3 as the optimal order of expansion. Also for other parameters x/L and L σP, such a third-order Edgeworth expansion seems to be a safe choice to get a substantial improvement as opposed to a simple Gaussian likelihood.

5.4. Higher dimensions

In all of the above calculations, we assumed one-dimensional random fields. However, we can easily generalise all results to higher dimensions. If we go to Ndim dimensions, with lag parameters x = (x1,...,xNdim), then the allowed Fourier modes are all  (90)with integer ni. Still, all the modes are independent and each has a Gaussian probability distribution with its dispersion given by

(90)with integer ni. Still, all the modes are independent and each has a Gaussian probability distribution with its dispersion given by  . The derivation of p(ξ) stays exactly the same as presented in Sect. 2.1. Where necessary, we can renumber all modes n with a single integer n by an arbitrary scheme and retain the old notations with scalar indices. Then, Eq. (22) still holds, with only two important changes.

. The derivation of p(ξ) stays exactly the same as presented in Sect. 2.1. Where necessary, we can renumber all modes n with a single integer n by an arbitrary scheme and retain the old notations with scalar indices. Then, Eq. (22) still holds, with only two important changes.

First, the sums now go over many more modes, namely all possible vectors of integers n = (n1,...,nNdim) with ni ∈ N. If, in a numerical implementation, we want to cover a box in k-space with N grid points in each direction, we therefore end up with NNdim modes. Besides the increased computational cost, this also leads to frequent occurrences of multiple poles. This is the case especially for x = 0, since then the Cn depend on |n| only. Therefore, the generalised result of Appendix A gets naturally important in higher dimensions. However, in real application scenarios, where accuracy is limited by external factors anyway, it is always possible to avoid multiple poles by slightly changing the Cn factors, e.g. by adding a small number ϵ in the cosine,  (91)Doing this removes the multiple poles while only slightly changing the results with respect to using the unmodified Cn and the full multi-pole formula, Eq. (A.3).

(91)Doing this removes the multiple poles while only slightly changing the results with respect to using the unmodified Cn and the full multi-pole formula, Eq. (A.3).

As a second effect, the factors Cn now depend on the angle between separation vector x and mode vector kn:  (92)However, a Gaussian random field is completely determined by its power spectrum, and when we assume a P(kn) which depends on the absolute values of the kn only, such a field should be statistically isotropic. Any anisotropies seen in our results must be a consequence of using a finite, cubic field instead of an infinite field. So we expect that all anisotropies vanish as soon as most of the power comes from scales much smaller than the field size. For power spectra concentrated narrowly towards k = 0, i.e. those with only a few significant modes, the low-k, large-scale modes will dominate for any finite field size. But for a wide power spectrum, where many small-scale modes contribute, a sufficiently large field size should lead to approximate isotropy.

(92)However, a Gaussian random field is completely determined by its power spectrum, and when we assume a P(kn) which depends on the absolute values of the kn only, such a field should be statistically isotropic. Any anisotropies seen in our results must be a consequence of using a finite, cubic field instead of an infinite field. So we expect that all anisotropies vanish as soon as most of the power comes from scales much smaller than the field size. For power spectra concentrated narrowly towards k = 0, i.e. those with only a few significant modes, the low-k, large-scale modes will dominate for any finite field size. But for a wide power spectrum, where many small-scale modes contribute, a sufficiently large field size should lead to approximate isotropy.

This is demonstrated for a two-dimensional field with a power spectrum P(k) = k-2 in Fig. 5. Both panels show the probability distributions for two separation vectors x and x′ of the same length, but rotated by 45°. (Or, more precisely, with x = (x1,0) and x′ = (x1cos(45° + ϵ),x1cos(45° − ϵ)), with ϵ = 10-10, to avoid double poles.) For a large separation to field size ratio, |x|/L = 0.3, seen in the left panel, the distributions for different separation vectors are quite different, while for |x|/L = 0.03, in the right panel, they have almost converged. Note that, for P(k) = k-2 in 2 dimensions, the field size L cancels out of the σn, and therefore we could keep the power spectrum normalisation constant while changing |x|/L, without changing the scale of p(ξ).

Isotropy is even easier to obtain for power spectra that are small both for very small and very large k and large only at intermediate wavelengths, since then the low-k, large scale modes do not harm isotropy. The ΛCDM power spectrum in cosmology fulfils this condition, allowing isotropic N-body simulations with reasonable field sizes.

However, these are no fundamental changes, and the resulting distribution function has all the same properties as the one-dimensional version. So all our results can be readily applied to higher dimensions, as long as the computational difficulties can be handled.

6. Conclusions

We have considered the problem of accurate likelihood functions for Bayesian analyses of data from Gaussian random fields. Making use of Fourier mode expansions and characteristic functions, we have derived analytically the probability distribution function of the correlation function for a one-dimensional finite Gaussian random field. For general power spectra, we can only give a sum formula for the distribution function. However, for the special power spectrum P(k) ∝ k-2, we have found an explicit expression in terms of elliptic theta functions. We can also, for general power spectra, calculate arbitrary moments and cumulants of the distribution by much simpler sum expressions.

Then, we continued the analytical approach for bivariate distributions as well, and found a similar, but even more complicated sum formula as a result. We have also outlined a general procedure for calculating arbitrary high multivariate distributions, though this is not feasible in practice.

Furthermore, we have considered the analytical properties of the new probability distribution function, which are well understood and consistent with numerical results. We used the moments of the univariate distribution to construct an Edgeworth expansion, which is able to closely approximate the true distribution function in the region of highest likelihood, and therefore could be a useful replacement of simple Gaussian approximations in Bayesian analyses. Finally, we found that all our results easily generalise to multi-dimensional fields.

Considering possible future applications of these results, we have to point out the importance of improving the correlation function likelihood, as well as the further steps that are necessary for a practical implementation.

As already mentioned in the introduction, the Gaussian approximation for the likelihood can lead to considerable deviations in parameter estimation. For example, in cosmic shear studies, this leads to significantly reduced accuracy (Hartlap et al. 2009). Similar effects are to be expected in other fields where correlation functions are used.

However, the work presented in this article has so far been purely mathematical, and the results are not readily applicable to real data. The main obstacle lies in the infeasibility of analytical calculations for higher multivariate distributions. If data of the correlation function over N bins needs to be analysed, we would need the full N-variate distribution function. Therefore, we expect that a numerical approach, as by Wilking & Schneider (in prep.), is best suited for practical computations. Still, their “quasi-Gaussian” approach makes direct use of the analytical univariate distribution function presented in this article. We also expect that the analytical results will yield important guidance and cross-checks for future numerical implementations.

We also note that our analytical results depend on the assumption of a Gaussian random field, whereas a lot of cosmological data probes the evolved density field on small scales, which is far from Gaussian. Nevertheless, applications for the analytical likelihood function could be found on very large scales, or in cosmic microwave background analysis, since the density fluctuations at that epoch were still either Gaussian or close to Gaussian. Furthermore, this work could also be relevant to fields outside of cosmology, for example in the common problem of time series analysis of Gaussian random processes.

Acknowledgments

We thank Jan Hartlap, Cristiano Porciani and Philipp Wilking for their help, comments and discussion during this project. This work was supported by the Deutsche Forschungsgemeinschaft under the project SCHN 342/11–1. D. Keitel was also supported by the Bonn-Cologne Graduate School of Physics and Astronomy.

References

- Bailey, D. H., Hida, Y., Li, X. S., & Thompson, O. 2002, ARPREC: An arbitrary precision computation package, Tech. Rep. [Google Scholar]

- Blinnikov, S., & Moessner, R. 1998, A&AS, 130, 193 [NASA ADS] [CrossRef] [EDP Sciences] [PubMed] [Google Scholar]

- Cox, D. 1962, Renewal theory, Methuen’s monographs on applied probability and statistics (London: Methuen) [Google Scholar]

- Fu, L., Semboloni, E., Hoekstra, H., et al. 2008, A&A, 479, 9 [Google Scholar]

- Galassi, M., Davies, J., Theiler, J., et al. 2009, GNU scientific library: reference manual, 3rd ed. (Bristol: Network Theory) [Google Scholar]

- Hammarwall, D., Bengtsson, M., & Ottersten, B. 2008, IEEE Transact. Signal Proc., 56, 1188 [NASA ADS] [CrossRef] [Google Scholar]

- Hartlap, J., Schrabback, T., Simon, P., & Schneider, P. 2009, A&A, 504, 689 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Kendall, M., & Stuart, A. 1977, The advanced theory of statistics, Distribution theory, 4th ed. (London: Charles Griffith & Company), vol. 1 [Google Scholar]

- Okumura, T., Matsubara, T., Eisenstein, D. J., et al. 2008, ApJ, 676, 889 [NASA ADS] [CrossRef] [MathSciNet] [Google Scholar]

- Prudnikov, A. P., Brychkov, Y. A., & Marichev, O. I. 1986, Integrals and Series, Elementary Functions (New York: Gordon & Breach), vol. 1 [Google Scholar]

- Sato, M., Ichiki, K., & Takeuchi, T. T. 2011, Phys. Rev. D, 83, 023501 [NASA ADS] [CrossRef] [Google Scholar]

- Schneider, P., & Hartlap, J. 2009, A&A, 504, 705 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Seljak, U., & Bertschinger, E. 1993, ApJ, 417, L9 [NASA ADS] [CrossRef] [Google Scholar]

- Whittaker, E. T., & Watson, G. N. 1963, A course of modern analysis, 4th ed. (Cambridge University Press) [Google Scholar]

Appendix A: Multiple poles

So far, to keep calculations simple, we have considered simple poles only. However, it is entirely possible to have multiple poles in the characteristic function, i.e. to have some mode numbers m ≠ n with Cm = Cn. This could happen if

-

the power spectrum is non-monotonic, withP(kn) = P(km) for some m ≠ n;

-

in higher dimensions, several different modes n have identical absolute value |kn|;

-

one of the lag parameters is commensurable with

and thus produces periodicity in cos(x·kn).

and thus produces periodicity in cos(x·kn).

From now on, we will use a scalar index n running over all modes n by some arbitrary numbering scheme. If a multiple pole of order k is present for some set of modes, which we will call  , we can calculate the residue as

, we can calculate the residue as ![\appendix \setcounter{section}{1} \begin{equation} {\rm Res}_{s_n} = \tfrac{1}{N_n(k-1)!} \lim\limits_{s \rightarrow s_n} \pderiv[k-1]{}{s} \left( \frac{(s-s_n)^k \eto{-\iu s \xi}}{\left(1-2 \iu s C_n\right)^k} \prod\limits_{C_m \neq C_n} \frac{1}{1-2 \iu s C_m} \right) , \end{equation}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq303.png) (A.1)where the weight Nn is the number of modes in

(A.1)where the weight Nn is the number of modes in  . We can then rewrite p(ξ) as the usual sum, limited to single pole modes, plus correction terms for the multiple poles. For example, when there are some double poles N ∗ and all other modes have single poles, the full expression reads

. We can then rewrite p(ξ) as the usual sum, limited to single pole modes, plus correction terms for the multiple poles. For example, when there are some double poles N ∗ and all other modes have single poles, the full expression reads  (A.2)For general types of poles, we can absorb all multi-pole contributions in a pole order correction factor

(A.2)For general types of poles, we can absorb all multi-pole contributions in a pole order correction factor  , so that we can write the probability distribution function compactly as

, so that we can write the probability distribution function compactly as  (A.3)If the nth mode belongs to a set of poles of order k, its correction factor is

(A.3)If the nth mode belongs to a set of poles of order k, its correction factor is ![\appendix \setcounter{section}{1} \begin{equation} \label{eq:univar_derivation_pxinpole} \mathcal{P}(k) = \frac{1}{k!(2C_n)^k} \left( \sum\limits_{ \left\{ a_i \right\} } A_{\left\{ a_i \right\}} \xi^{a_0} \prod\limits_{i=1}^{k-1} \left[ \sum\limits_{C_m \neq C_n} \left(\frac{C_m}{1-\frac{C_m}{C_n}}\right)^i \right]^{a_i} \right) , \end{equation}](/articles/aa/full_html/2011/10/aa17284-11/aa17284-11-eq311.png) (A.4)

(A.4)

Pole order correction factors.

where the outer sum goes over all sets of integers ai that fulfil  (A.5)This expression was extrapolated from explicit calculation of up to quintuple poles. The prefactors A{ai} obtained in these calculations are given in Table A.1. We still have to find a general expression for these prefactors, so that we can also give

(A.5)This expression was extrapolated from explicit calculation of up to quintuple poles. The prefactors A{ai} obtained in these calculations are given in Table A.1. We still have to find a general expression for these prefactors, so that we can also give  for k > 5.

for k > 5.

. Recently, it was brought to our attention (thanks to Stefan Hilbert) that a related distribution has long been known in